How to protect yourself from deepfake scams

Advances in AI have made it possible to create highly realistic fake audio, video, or images, called deepfakes. While this technology has legitimate uses (like art or film), scammers also use it to mimic familiar people or scenarios to obtain money or sensitive information.

In this post, we explain what deepfake scams are and how they work, with real-world examples. We also cover the warning signs to look for and ways people can help protect themselves and their businesses.

What are deepfake scams?

Deepfake scams use artificial intelligence to create realistic audio, video, or images that impersonate real people, such as relatives, executives, celebrities, coworkers, or officials, in order to deceive victims.

These scams can be convincing. They often combine emotional manipulation, urgency, and authority to override doubts and push victims to act without thinking.

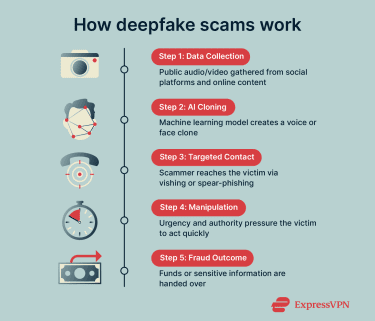

How deepfake scams work

Scammers collect publicly available or scraped audio and video samples of their target from social media, YouTube interviews, company videos, or online conferences. For voice scams, they use this data to train AI models to clone the person's voice and language style.

For video impersonations, scammers use AI-based face-swapping, lip-syncing, and voice tools: the target's face and the desired audio are fed into a deepfake tool, which produces video of the target appearing to say those words.

Once a believable clone is ready, attackers contact the victim, often someone who knows the target, through phishing methods such as vishing (voice phishing) and spear phishing (highly targeted and personalized emails or calls).

The deepfake (voice call or video) builds credibility, making it easier to manipulate the victim into taking action, such as sharing sensitive information, approving payments, or sending money (e.g., via crypto or wire transfer).

Deepfakes can also be used for identity theft and fraud when the clone is paired with stolen personal data. For example, a bad actor might create a cloned identity to bypass remote identity verification during account openings or loan applications. While 3D facial recognition is more resistant to spoofing, digital know-your-customer (KYC) systems that rely on selfies or video selfies could be vulnerable to manipulation.

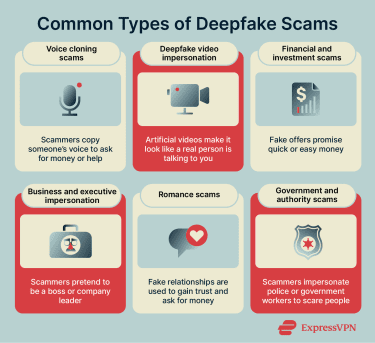

Common types of deepfake scams

Deepfake scams take different forms, adapting to the target, context, and goal. Here are some of the main types to know.

Grandparent scams

In a grandparent scam, an attacker impersonates a grandchild or another family member. They typically invent a situation where a loved one is in distress, such as an accident, an arrest, or a medical emergency, to get the target to act.

In one case involving a deepfake, an 86-year-old woman in the U.S. received a call from someone she believed was her granddaughter. The caller said she had been detained by the police, and a second person said he needed money to get her “granddaughter” out of jail.

She was told to pay in cash and that someone would come to pick it up. The voice was believable, and she paid the man $6,000.

Learn more: Online safety tips for seniors

Romance scams

Romance scammers build fake romantic relationships online (typically through social media), sometimes using deepfake videos, voice cloning, or AI-generated personas to make the connection feel genuine.

After building trust with the victim over weeks or months, they typically ask for money for emergencies, investments, or travel.

For example, in 2024, 27 people were arrested in Hong Kong for using deepfake technology to impersonate attractive women in video calls. The fraudsters cheated their male victims out of HK$360 million (about US$46 million). Regional news reported that the syndicate had created a fake trading platform and used social media and computer networks to carry out the fraud.

Fake celebrity scams

Fake celebrity scams use deepfake videos or audio of famous people.

In one widely reported case, a French woman was scammed out of $800,000 by someone pretending to be Brad Pitt. The scammer used deepfake photos and videos to convince her that he was in the hospital and couldn’t pay his medical bills.

Other examples include AI-generated videos of Elon Musk promoting fake crypto giveaways, which have cost victims thousands of dollars.

These videos can spread quickly on social media because they exploit fans' trust in the celebrity and the size of the celebrity's online following.

Government and law enforcement impersonation

In these scams, fraudsters impersonate government authorities, law enforcement, and other prominent figures. Deepfakes aren't essential to this scam, but scammers may use AI-generated voices or videos to make it more believable. In some cases, they may claim the victim is under investigation for crimes such as money laundering or tax fraud.

Scammers typically build rapport with the victim before moving the conversation to an encrypted app, where they ask for payment, personal details, or account access. The FBI has warned about an ongoing scam that follows this pattern since 2023.

Business executive and CEO fraud

Sometimes referred to as business email compromise (BEC) or whaling, this type of fraud involves scammers posing as a senior executive, such as a company’s CEO or CFO, to borrow their trust and authority. It typically targets employees who handle payments, payroll, or sensitive data.

While these scams relied mostly on spoofed emails or phone calls in the past, convincing deepfake audio and video impersonations are becoming more common.

Scammers may contact the victim by email, voice call, or video message and create a sense of urgency, for example by demanding an emergency transfer or invoking a confidential deal.

These scams can lead to financial loss, unauthorized access, or credential exposure, and can be more challenging to spot in remote or hybrid workplaces, where face-to-face verification is less common. Always confirm unusual requests through established channels.

How to recognize deepfake scams

Voice cloning red flags

While scammers may need only a short audio clip to create a realistic clone, cloned voices can still have telltale weaknesses, such as:

- Tone: Lower-quality clones can sound strangely flat or emotionless, missing the usual warmth, excitement, or stress you’d expect from that person.

- Strange pauses: You might notice awkward pauses or tiny delays right before answers, almost like the AI is thinking or processing.

- Background noise: The sounds don’t match the story (for example, someone supposedly in a noisy emergency room sounds eerily quiet).

- Missing imperfections: Everything sounds too polished, rehearsed, or unnaturally clear, with no normal stumbles, filler words (“um,” “you know”), or breathing changes.

However, more advanced voice cloning methods can preserve the original speaker's natural tone, timing, emotions, and imperfections.

That's why the biggest red flag is often simply an unexpected or emotional call. Pay close attention to this, especially if the call doesn't come from the person's usual number. The best defense is typically independent verification.

Deepfake video red flags

Deepfake video can be hard to spot, but current AI models still struggle with subtle human details. Pay close attention to these signs during any unexpected video interaction:

- Lip-sync issues: In real-time swaps, mouth movements might lag, move too smoothly, or look rubbery, especially on rounded vowels, such as “O” or “U”.

- Unnatural eyes: Blinking happens too rarely, too often, or not at all; eyes may appear glassy, misaligned, or stare unnaturally.

- Background/object permanence issues: In videos longer than 10 to 20 seconds, you may notice background objects disappearing or changing when the person moves. Because real-time swaps patch the face frame by frame, smaller details are often lost.

- Blurring or artifacts around edges: There's graininess or distortion around the head, hair, or when the person turns sideways.

- Face quality mismatch: In enhanced real-time swaps, the face can look unnaturally sharp or 'studio-lit' compared to the rest of the video (body, hair, or the background).

If you're on a real-time call and unsure whether you're dealing with a real person, ask them to briefly cover part of their face with their hand or turn to the side. Real-time face swaps often glitch when the face is partly hidden or seen in profile.

If you spot these or anything feels "off", end the call. Never act on urgent requests without independent verification. Call back on a trusted number or meet in person if possible.

Behavioral and psychological red flags

Deepfake scams work by hijacking normal human reactions, like fear, trust, excitement, or love. Scammers craft messages to override caution. Watch for these patterns in any suspicious call, email, text, or video:

- Extreme urgency: They might create a false emergency that requires immediate action, encouraging victims to act impulsively.

- Heavy emotional pull: The stories are designed to trigger fear, guilt, sympathy, or greed.

- Requests for money or secrets: They may ask for wire transfers, gift cards, crypto, passwords, or sensitive information, often with secrecy ("don't tell anyone").

- Unusual channels or pressure to switch: Contact comes from an odd number or app; they push to move to encrypted chat or avoid official verification.

- Too-good-to-be-true or dramatic story: The story feels overly urgent, personal, or perfect, often built from details scraped from the victim's online life.

How to prevent deepfake scams

Preventing deepfake scams follows the same core principles as preventing phishing: exercise caution with unexpected requests, and verify through independent channels.

Tips for individuals

- Limit your digital footprint. Make social media profiles private and avoid posting high-quality videos or audio of yourself and your family. Less public material means fewer samples for voice cloning.

- Use a family safe word. Agree on a secret phrase or question that only family members would know, and ask for it whenever a call claims there's an emergency.

- Verify through trusted channels. If someone contacts you unexpectedly by email when you usually communicate on WhatsApp, verify it’s really them by messaging them directly on the platform you normally use.

- Pause on urgency. Always be skeptical of unsolicited messages. Legitimate requests rarely demand instant action. Question anything that feels too dramatic or emotional.

- Strengthen security. Enable two-factor authentication (2FA) or multi-factor authentication (MFA) everywhere, especially for your email and bank accounts. Prefer authenticator apps to SMS codes, and keep software updated.

Tips for businesses

- Train employees: Keep personnel up to date on how these scams work and what to watch for, and run realistic drills, especially executive impersonation scenarios, so staff know how to respond under pressure.

- Verify high-risk requests: Require confirmation through more than one channel for payments, payroll changes, or data access. Always verify using known contact details, not the ones in the message.

- Get the basics right: Use MFA everywhere, choosing phishing-resistant methods such as passkeys or hardware security keys where possible, enforce strong passwords with password managers, and keep systems up to date.

- Set firm rules for sensitive actions: Use dual approvals, transfer limits, and clear escalation paths. Any request to bypass normal procedures should be treated as suspicious, even if it appears to come from leadership.

- Monitor and respond quickly: Watch for unusual activity across email, calls, and meetings. Make it easy for employees to report concerns so issues can be contained fast.

What to do if you encounter a deepfake scam

While prevention is best, here's what to do if you suspect you've encountered a deepfake scam.

Stop and verify the request

If a call, video, or message feels urgent or suspicious, end the call, close the session, or disregard the message and verify through another channel. For example, call the real person back using a saved, trusted number, or check in with them in person.

Report the scam

In the United States, consider filing a report with the FBI’s Internet Crime Complaint Center (IC3) using the File a Complaint button on its homepage, or contact local authorities. You can also report the scam to the Federal Trade Commission (FTC) by clicking Report Now.

If you live outside the US, consider contacting your local cybercrime unit or national reporting service. If any money was sent, notify your bank or payment provider immediately.

Save screenshots, emails, call logs, and recordings as evidence before deleting anything.

Protect your accounts and identity

If you may have shared personal or account information, acting fast can minimize any potential impact. Contact your bank or financial provider to help secure your accounts, flag suspicious activity, or stop transfers where possible.

Update passwords on any potentially affected accounts, enable MFA, and regularly review bank and credit card statements for any unauthorized charges.

If needed, place a fraud alert or credit freeze with the major credit bureaus. This can make it harder for anyone to open new accounts in your name.

FAQ: Common questions about deepfake scams

What are deepfake scams?

Deepfake scams use AI-generated media (videos, images, or fake audio) that impersonate real people (relatives, celebrities, or executives, for example) for fraud. These scams rely on social engineering tactics to pressure victims into taking an action, like sharing personal information or sending money.

How can I avoid AI deepfake scams?

You can lower the risk of falling victim to deepfake scams by limiting your digital footprint to reduce the amount of public data scammers can use, verifying requests through trusted channels before acting, and using layers of security like multi-factor authentication.

What is an example of a deepfake scam?

Common examples include grandparent scams, romance scams, and celebrity scams (such as videos promoting fake crypto giveaways). Other scams may include deepfake video calls impersonating executives, where employees see their "boss" on a video call asking for something, such as account access.

How can you tell if a voice or video is a deepfake?

Deepfakes are often difficult to detect but may show subtle flaws. AI voice clones sometimes have a flat or unnatural tone, strange pauses, missing filler words, and background noises that don't match the situation. With video, common giveaways include lip-sync issues, unnatural blinking, distorted facial features, inconsistent lighting, and visual artifacts around the person’s face or hands.

How do deepfake scams affect businesses?

Deepfake attacks pose notable risks to organizations, particularly through business email compromise (BEC), executive impersonation, and fraud targeting finance or payroll teams. When successful, these scams can cause financial losses, reputational damage, and data exposure. Following the business tips above can help keep your organization safe.

Take the first step to protect yourself online. Try ExpressVPN risk-free.
