What is AI phishing, and how can you avoid it?

Artificial intelligence (AI) has become a part of everyday life for many people. It can assist with everything from meal planning to managing schedules and emails and can enhance the cybersecurity tools that help prevent digital attacks.

However, in the wrong hands, the same technology can improve phishing attacks by making scam messages more convincing and easier to scale. In this guide, we explain how AI is being used to improve phishing tactics, how to recognize these attacks, and what you can do to reduce your risk.

What is AI phishing?

AI phishing is a type of AI-powered scam that uses artificial intelligence to improve the quality, scale, and therefore effectiveness of fake messages. For example, AI can assist scammers in writing emails without spelling and grammar mistakes or clone voices for voice phishing (or vishing) calls.

This isn’t a new type of phishing scam to worry about; rather, it refers to how the deceptive message is created. AI phishing uses the same delivery methods as non-AI-based phishing attacks; it simply uses AI tools to increase plausibility.

The goal is still the same: to manipulate people into taking an action. This may include making a payment, clicking a link, or sharing personal information that malicious actors can later exploit.

How AI phishing attacks work

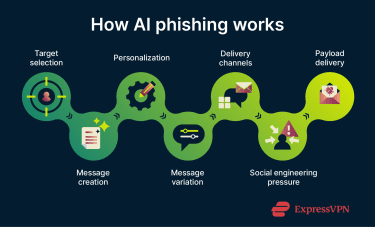

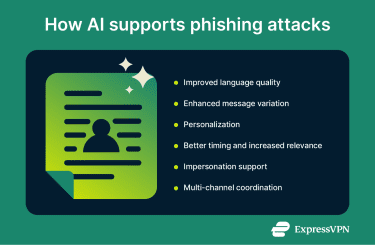

AI supports phishing attacks by making them faster to produce and harder to recognize. Instead of writing individual messages, attackers can use AI tools to create large volumes of believable content and adapt it to different targets with minimal effort.

Generative AI has made it easier for attackers to create mass, multi-channel, and semi-automated phishing campaigns. Before generative AI, attackers might write a single phishing message and then use text-spinning tools to produce multiple versions. These tools mainly reordered words or paragraphs, which often made the messages awkward and easy to spot.

AI is also enabling criminals to expand their attack vectors from email and SMS to other, more interactive mediums, such as:

- Audio creation, like voice cloning for vishing calls

- Real-time voice filters that alter how an attacker sounds during a phone call

- Deepfake video, where attackers impersonate a business contact or loved one

AI-generated emails and messages

Large language models (LLMs) can quickly generate phishing emails, text messages, and even web content designed to mimic or spoof legitimate websites. The tools don’t understand intent, so they may create scam content if prompted to do so.

One technique commonly associated with AI-generated phishing is polymorphic phishing. This refers to campaigns where each message is slightly different from the last. Each message follows the same overall scam, but small changes make them appear unique. This makes it harder for email filters to flag identical messages and can give recipients the impression that the message was written specifically for them.
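To illustrate why small variations matter, here is a minimal Python sketch. It assumes a hypothetical filter that blocks messages by exact fingerprint (a SHA-256 hash of the text). The two sample messages and the 0.7 similarity threshold are invented for illustration, and real email filters are considerably more sophisticated than this.

```python
import hashlib
from difflib import SequenceMatcher


def fingerprint(message: str) -> str:
    """Exact-match signature: any change to the text changes the hash."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


def similarity(a: str, b: str) -> float:
    """Rough textual similarity between 0.0 and 1.0."""
    return SequenceMatcher(None, a, b).ratio()


# Two invented variants of the same scam message.
known_scam = "Hi Sam, please review the attached invoice before Friday's deadline."
new_variant = "Hello Sam, kindly review the attached invoice ahead of Friday's deadline."

# Hypothetical blocklist of fingerprints from previously reported scams.
blocklist = {fingerprint(known_scam)}

# The reworded variant has a different hash, so the exact-match check misses it.
exact_match = fingerprint(new_variant) in blocklist

# A fuzzy comparison still sees that the two messages are closely related.
looks_related = similarity(known_scam, new_variant) >= 0.7  # illustrative threshold

print(f"exact match: {exact_match}, looks related: {looks_related}")
```

The exact-match check fails on the reworded message, while the fuzzy comparison still links the two. Varied wording weakens identical-message detection, but it doesn't hide the underlying pattern from tools that compare content more loosely.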

Automation and personalization at scale

AI allows attackers to combine automation with personalization in ways that were previously difficult to sustain. Instead of manually researching targets or customizing messages one at a time, attackers can use AI tools to scrape social media and publicly available websites for details about their targets.

These details may include job roles, recent events, professional relationships, or references to ongoing projects. The resulting messages feel personal even though they’re generated programmatically.

This shift removes a key limitation of traditional phishing. Campaigns no longer have to choose between reach and relevance. AI makes it possible to send large volumes of messages that still appear context-aware, increasing the odds that the target will engage without realizing the message is a phishing attack.

Impersonation using AI tools

AI-powered phishing attacks often rely on impersonating trusted individuals. Attackers can analyze communication styles, writing patterns, and publicly available information to craft messages, calls, or videos that convincingly mimic colleagues, executives, or friends and family.

Some common techniques include:

- Email that mirrors an organization’s tone, formatting, and signature style

- Voice calls using synthetic audio to imitate a known individual

- Video interactions with manipulated visuals to reinforce credibility

By maintaining consistency across channels, attackers can exploit trust more effectively, making even cautious users more likely to engage and overlook warning signs.

AI phishing vs. traditional phishing

AI phishing and traditional phishing share the same foundations. Both rely on social engineering techniques designed to manipulate people into trusting a message and taking an action, such as:

- Clicking a link

- Opening an attachment

- Making a payment

- Sharing sensitive information

A traditional phishing attack typically begins with a message delivered by email, text message, or another communication channel. It often impersonates a trusted organization, such as a bank, delivery service, or government agency. The message is often written in a style that creates urgency, prompting the target to act quickly without verifying the sender.

The malicious content can be delivered via a link or attachment. Clicking a link may redirect the recipient to a spoofed website designed to capture login credentials or payment details. Opening an attachment may install malicious software on the device. In both cases, the objective is to collect information that can later be exploited for fraud, identity theft, or unauthorized access.

AI-driven phishing attacks follow the same process and have the same goals. The difference lies in how the messages are created and delivered. AI allows attackers to produce more polished, tailored, and scalable phishing content, which can make these attacks more convincing and harder to detect.

Key differences in technique

Traditional phishing often relies on blunt tactics, such as exaggerated urgency. Messages may include alarming language or generic requests that make them easier to spot. AI-assisted phishing, on the other hand, tends to be more restrained. Messages are often written in a professional tone and apply time pressure in a way that feels plausible rather than extreme. Instead of urgent warnings like “your account will be deleted,” a message may reference a routine deadline or an internal request that appears time-sensitive but ordinary.

AI can also help attackers position messages more carefully. Rather than impersonating senior executives directly, messages may appear to come from someone just high enough in an organization to make a request seem reasonable. This reduces suspicion while still leveraging perceived authority.

Another difference is coordination. Some AI-assisted phishing campaigns combine multiple communication channels. A recipient might receive an email referencing a prior conversation, followed by a text message or phone call reinforcing the request. Each step builds on the last, creating the impression of continuity.

Despite this added polish, these attacks can still lack important human context. Legitimate requests usually include background or reasoning. Messages that push for action without providing meaningful context remain a key warning sign, regardless of how well written they are.

How AI changes scale and targeting

One of the primary ways AI affects phishing is by reducing the time and effort required to create convincing messages. For example, an IBM X-Force experiment compared phishing emails generated by an LLM with ones crafted by the company’s red team. In that test, the AI tool generated a tailored phishing email in about five minutes, compared with roughly 16 hours of human effort to produce a similar message. The AI-generated message proved comparably effective, even though it took a fraction of the time to create.

AI also enables attackers to increase volume and variation. Instead of spending hours refining each message, they can use AI to produce numerous versions with natural phrasing, making detection by basic email filters more difficult. The time saved can also be used to gather and incorporate publicly available target information, enhancing the relevance and personalization of each message.

Why traditional phishing still exists

Despite these advances, traditional phishing is still commonly used. It’s proven to work, is relatively easy to execute, and is inexpensive to run. When targeting large audiences, attackers don’t need sophisticated tooling or highly tailored messages, because sending high volumes of generic emails can still produce results even if only a tiny percentage of recipients respond.

In practice, attackers frequently combine approaches. Some campaigns rely on basic phishing techniques, while others incorporate AI-assisted elements to improve quality or reach. The continued use of traditional phishing reinforces an important point: AI hasn’t changed the goal of phishing attacks, only how efficiently they can be carried out.

Warning signs of AI phishing

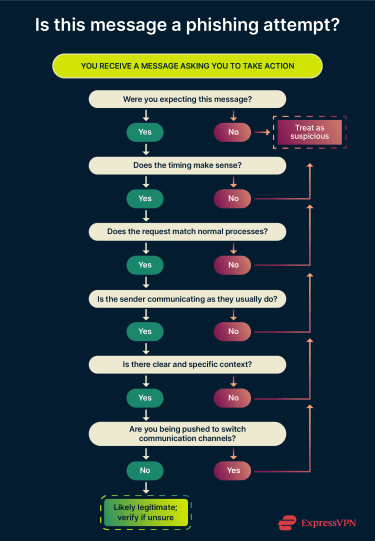

Spotting AI phishing attacks depends on paying attention to context, behavior, and inconsistencies across communication channels. Here are the key aspects to review carefully if you’re unsure about a communication you’ve received.

Unusual timing or context

A message may arrive outside normal working hours, during holidays, or at a time when the sender is known to be unavailable.

Context is also important. Watch out for messages that:

- Reference projects, transactions, or conversations you were never involved in

- Seem “coincidentally” relevant but come from an unfamiliar sender or unexpected channel

- Contain just enough real information to sound credible but lack meaningful detail or background

Individually, these signs don’t confirm a phishing attempt. However, when unusual timing is combined with unclear context or pressure to act quickly, it’s a strong indication that the message should be verified through a trusted, independent channel.

Requests that bypass normal processes

Many AI phishing attacks attempt to shortcut established procedures. Messages may ask for immediate payments, urgent data sharing, or confidential actions that avoid standard approval steps.

Requests that discourage verification or suggest skipping routine checks should be treated with caution, even when they claim to come from senior staff, vendors, or trusted organizations.

Pressure to move conversations off normal channels

Attackers often try to shift communication away from official or monitored platforms. A message may ask you to continue a conversation through personal email, messaging apps, or phone calls instead of established workplace systems.

This tactic reduces visibility and increases the attacker’s control over the interaction. Sudden channel changes, particularly when paired with urgency, are a common warning sign.

Voice or message mismatches with known behavior

Even highly convincing AI-generated messages can contain subtle inconsistencies. These may include changes in tone, phrasing, or communication style that don't match how the sender normally writes or speaks.

With voice- or video-based attacks, mismatches may involve unusual word choices, unnatural pauses, or behavior that feels slightly out of character. Trusting your intuition and familiarity with how people usually communicate can be an effective way to identify suspicious interactions.

How to protect yourself from AI phishing

The most effective defenses against AI phishing focus on verification, awareness, and habits that reduce the impact of increasingly polished scam messages.

Verifying senders and requests

When you receive an email, message, or call that asks you to click a link, download a file, or take any other action, pause before responding.

Start by asking a few simple questions:

- Was I expecting this message? Legitimate communications usually arrive in context. If someone sends a file-sharing link, you likely knew it was coming. Unexpected requests deserve extra scrutiny.

- Does this match how this person normally communicates? A colleague who usually sends casual messages suddenly writing in a formal tone can be a warning sign. Similarly, a vendor who typically calls before making requests but suddenly asks for sensitive information by email may be acting out of character.

- What exactly is being asked? Legitimate requests tend to include clear context. A message referencing a specific meeting or ongoing task is more credible than a vague request to review an “important document.”

A quick SLAM check (sender, links, attachments, message) can also help:

- Sender: Check the full sender email address, not just the display name.

- Links: Hover over links to see where they actually lead.

- Attachments: Examine attachments for unusual file types or naming conventions.

- Message: Read the message for consistency in tone and details.
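The sender and link checks above can be sketched in a few lines of Python using only the standard library. This is an illustrative sketch, not a complete detector: the `From` header, the HTML snippet, and the `.test` domains are all made up, and the helper names (`sender_domain`, `LinkCollector`) are hypothetical.

```python
from email.utils import parseaddr
from html.parser import HTMLParser
from urllib.parse import urlparse


def sender_domain(from_header: str) -> str:
    """Return the domain of the real address, ignoring the display name."""
    _display_name, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


class LinkCollector(HTMLParser):
    """Collect (visible text, actual destination host) for each <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only record text that appears inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            host = urlparse(self._href).hostname or ""
            self.links.append(("".join(self._text).strip(), host))
            self._href = None


# Invented example: the display name looks official, the address does not.
from_header = '"Example Bank Support" <alerts@examp1e-bank.test>'
body = '<p>Sign in at <a href="https://login.examp1e-bank.test/reset">www.examplebank.test</a></p>'

domain = sender_domain(from_header)
collector = LinkCollector()
collector.feed(body)

print(domain)           # the domain actually used to send the message
print(collector.links)  # what the link shows vs. where it really goes
```

Here the display name and the visible link text both look legitimate, but the underlying address and destination host point somewhere else. That is the same mismatch you look for when you check the full sender address and hover over a link.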

Consider whether the request makes sense given your relationship with the sender. If something feels off, don’t click. Being cautious may cost you a few minutes of clarification, but being careless can lead to far more serious consequences.

Limiting personal data exposure

AI phishing attacks often rely on publicly available information to make messages feel personal. Improving your online privacy and security includes limiting how much information you share online, which makes it harder for attackers to find details and use them against you.

Review privacy settings on your social media accounts, avoid oversharing professional details, and be cautious about posting information related to travel, work responsibilities, or internal projects. The less context attackers have, the harder it is for them to craft convincing messages.

Using security and privacy tools

Security and privacy tools can provide an additional layer of protection. Browser-based protections and security extensions can warn you when you’re about to visit a suspicious site. For example, ExpressVPN’s Threat Manager helps block your device from communicating with domains associated with known malicious activity.

Password managers also offer a useful defensive signal. They only autofill credentials on the websites you have saved for your logins. If you land on a fake login page and your credentials don't autofill, that inconsistency can alert you to a potential phishing attempt before any information is submitted.
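As a rough illustration of why autofill fails on a fake page, the sketch below compares the host of the saved login with the host of the page you’re on. It assumes a simple exact-host rule over HTTPS. Actual password managers apply their own matching rules, so treat the `should_autofill` function and the example URLs as hypothetical.

```python
from urllib.parse import urlparse


def should_autofill(saved_url: str, current_url: str) -> bool:
    """Offer saved credentials only when the page host matches the saved host."""
    saved = urlparse(saved_url)
    current = urlparse(current_url)
    return (
        current.scheme == "https"
        and saved.hostname is not None
        and saved.hostname == current.hostname
    )


saved_login = "https://accounts.example.com/login"

# Same host, different path: credentials are offered.
print(should_autofill(saved_login, "https://accounts.example.com/signin"))

# Lookalike hosts: the strings resemble the real site, but the host differs.
print(should_autofill(saved_login, "https://accounts.examp1e.com/login"))
print(should_autofill(saved_login, "https://accounts.example.com.login-check.test/signin"))
```

A lookalike address can fool a quick glance, but a string comparison doesn’t get tired or rushed. That’s why a missing autofill prompt is worth treating as a signal rather than an inconvenience.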

Staying informed about phishing tactics

Phishing techniques continue to evolve. Staying informed about common scam patterns helps you recognize suspicious behavior before it causes harm.

For individual users, this means:

- Following reputable cybersecurity blogs or alerts from vendors.

- Subscribing to phishing simulation newsletters or periodic test emails to practice spotting scams.

- Reviewing recent news about scams targeting your region or industry.

- Discussing unusual messages with colleagues or friends before taking action.

For organizations, it involves:

- Sharing real-world examples of attacks and simulating phishing campaigns to reinforce learning.

- Maintaining an internal reporting process for suspicious messages so employees can verify and escalate potential threats.

- Keeping up with threat intelligence reports and vendor alerts to understand new tactics targeting your sector.

Why no single tool stops AI phishing

No single tool can fully stop AI phishing. Modern email platforms rely on machine learning (ML) and behavioral analysis to evaluate incoming messages, assessing factors like sender reputation, domain age, link destinations, message structure, and how closely the content resembles known phishing or legitimate communication patterns. These systems can block a large proportion of malicious emails before they reach inboxes, but they’re not foolproof.
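To give a feel for how several weak signals can be combined into one decision, here is a deliberately simplified Python sketch. The signal names, weights, and bias are invented for illustration. A real email platform would learn such values from large amounts of data and use far more features than the four shown here.

```python
import math

# Invented weights for illustration only; a real system would learn these from data.
WEIGHTS = {
    "poor_sender_reputation": 1.5,
    "newly_registered_domain": 1.2,
    "link_host_mismatch": 1.8,
    "resembles_known_phishing": 2.0,
}
BIAS = -3.0  # keeps the score low when no warning signals are present


def phishing_score(signals: dict[str, bool]) -> float:
    """Combine the signals that fired into a score between 0 and 1."""
    z = BIAS + sum(weight for name, weight in WEIGHTS.items() if signals.get(name))
    return 1.0 / (1.0 + math.exp(-z))


no_signals = phishing_score({})
one_signal = phishing_score({"newly_registered_domain": True})
all_signals = phishing_score({name: True for name in WEIGHTS})

print(f"{no_signals:.2f} {one_signal:.2f} {all_signals:.2f}")
```

In this toy model, a single signal barely moves the score, while several together push it close to 1. It also shows the limitation described above: a well-crafted message that triggers none of the measured signals gets a low score, however malicious it is.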

Link analysis services can also provide an additional layer of protection. Tools like VirusTotal or PhishTank let users and security teams analyze suspicious URLs or attachments without interacting with them directly, helping identify destinations associated with known phishing or malware campaigns.

However, attackers increasingly use AI to generate polymorphic phishing campaigns, where each message is slightly different in wording, formatting, or context. This reduces the effectiveness of signature-based and pattern-matching detection. As a result, the most effective defense combines layered technical controls with human judgment and verification habits.

FAQ: Common questions about AI phishing

What are the most common types of phishing today?

Email phishing remains the most common form of phishing by volume, largely because it allows attackers to reach large numbers of targets quickly and at low cost. Most phishing campaigns still begin with an email that impersonates a trusted organization and attempts to lure the recipient into clicking a link, opening an attachment, or entering credentials.

Can AI be used to detect phishing attacks?

Yes. AI tools can make it easier to detect a phishing attempt. Email security platforms and spam filters rely on ML to analyze message patterns, sender behavior, and link characteristics to identify suspicious activity. However, detection tools aren’t foolproof. As attackers use AI to create more varied and context-aware messages, some phishing attempts can evade automated systems.

Are businesses more at risk from AI phishing than individuals?

Businesses are often attractive targets because phishing attacks can lead to financial fraud, data breaches, or unauthorized access to internal systems. AI phishing can increase this risk by making impersonation of executives, vendors, or internal teams more convincing. However, AI scams involving account alerts, deliveries, or payment requests frequently target individuals as well.
