Popular AI Image Generator Exposed Over One Million Images, Including Deepfakes and “Nudify” Face Swaps

Cybersecurity researcher Jeremiah Fowler uncovered a data leak affecting an AI image generator tool and shared his findings with ExpressVPN. We are publishing his report to help the public stay informed and protected as part of our ongoing effort to highlight important security risks.

The publicly exposed database I discovered was not password-protected or encrypted. It contained over one million image and video files (1,099,985 records). In the limited sample of exposed files I reviewed as part of the investigation, nearly all were pornographic images, including what appeared to be AI-generated or manipulated portrayals of very young individuals. These included images in which the faces of adults and young individuals had been swapped onto AI-generated bodies. Even more concerning, I also observed what appeared to be unaltered images of real individuals in the dataset. These were possibly uploaded as reference images and, I assume, without the individuals’ knowledge or consent.

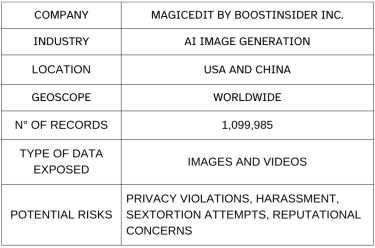

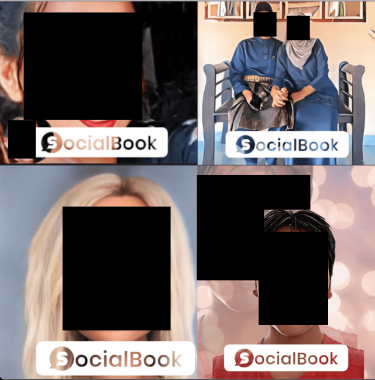

The name of the database and its internal watermarked images indicated that they belonged to a company called SocialBook. It is based in Silicon Valley and offers a range of services for influencers and marketing, including AI image- and content-generation tools. While reviewing SocialBook’s AI image-generation feature, we identified a link redirecting to MagicEdit dot app. At the time of publication, MagicEdit appeared in Apple’s App Store under the developer name BoostInsider Inc., which we have since learned has been dissolved. MagicEdit and DreamPal are operated by DreamX Inc. and are not affiliated with SocialBook.

Once I had confirmed the owner of the database and the veracity of the leak, I immediately sent a responsible disclosure notice to SocialBook and MagicEdit, and the database was subsequently restricted from public access. I received a reply stating: “Thank you for this responsible disclosure. We take this extremely seriously and we are conducting a full investigation into the scope of the exposure.” Although the records belonged to MagicEdit, it is not known whether the database was owned and managed directly by the company or by a third-party contractor. It is also not known how long the database was exposed before I discovered it, or whether anyone else may have gained access to it. Only an internal forensic audit could identify additional access or potentially suspicious activity. Between the disclosure notice and the publication of this report, the MagicEdit website became inaccessible, and the apps were removed and are no longer available.

MagicEdit is an AI-powered image-generation platform that enables users to enter text descriptions or upload pictures to create unrestricted or adult-themed images, face-swap images, remove backgrounds, and more. The App Store description states that it is intended for users over 18 and warns that there may be sexual content or nudity. Based on the files I saw in a limited sample, an overwhelming majority of the images were explicit and depicted adult content. According to its website, MagicEdit supports multiple artistic styles, such as realistic, anime, cartoon, fine art, and abstract. The app is monetized through coins and subscriptions. The app allowed users to log in and see their own images, but because of how the database was exposed, anyone could view other users’ images with nothing more than an internet connection and a web browser. This exposure highlights broader privacy concerns as more personal data and images are collected and stored, and could potentially be misused, across digital platforms.

This screenshot is a collage of images depicting either what appeared to be very young, fully clothed individuals or adult faces composited onto the bodies of individuals who appeared to be minors. Although there is no nudity, these images have been redacted to conceal fully clothed body parts and to protect the privacy of real individuals whose likeness or representation may be depicted.

This screenshot shows a collage of redacted images that appear to portray real individuals or reference source images for MagicEdit’s tools and services.

This image is a collage of screenshots that show redacted pictures with a SocialBook watermark.

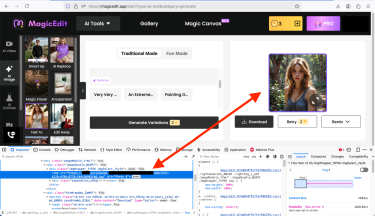

This screenshot shows an image generated by MagicEdit’s text-to-image tool. It also shows an open-source browser add-on for page inspection that displays both the storage URL and the hosting location of the generated image.

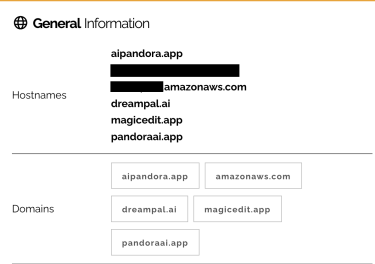

This screenshot from a public IoT search engine shows the host names and domains that share the same IP address as the MagicEdit app.

There is a wide range of AI image generators that claim to produce sexually explicit content from text prompts or user-submitted images. These programs can easily learn from and draw on the abundance of adult material available online. Any tool that enables AI-powered face- or body-swapping without a person’s knowledge or consent raises significant ethical, privacy, and potentially legal concerns. AI-generated or face-swapped images that depict nudity (commonly referred to as “deepfake pornography” or “nudified images”) can be weaponized for blackmail, harassment, or revenge.

In recent years, the technology used to create these images has improved drastically, making it easy for users with no advanced skills to generate highly realistic images. Nonconsensual deepfake content has become a serious issue, and many states have enacted laws to protect residents from having their images used without consent to create deepfake adult content. According to a Public Citizen tracker (a site that monitors state legislation on intimate deepfakes), legislation has not kept pace with the rapid growth of the technology. Some reports estimate that 96% of all deepfakes online are pornographic, and that 99% of these involve women who did not consent to their likeness being used to create sexually explicit content.

The exposed database held numerous files that appeared to be explicit, AI-generated depictions of underage individuals and, potentially, children. While MagicEdit’s terms of service include an Account Termination Policy that grants the right to terminate user accounts for pornographic content, I did not identify any express provision covering nonconsensual content or images of minors generated through the platform. Additionally, section 5 of MagicEdit’s privacy policy states that images of real users’ faces will not be saved to the cloud. It is not known whether the database was owned or managed by a third party or a contractor.

As an ethical researcher, I do not download, retain, or screenshot illicit or potentially illegal files or images. Moreover, I am not claiming that the individuals depicted did not provide their consent for their likeness to be used with the MagicEdit platform, nor am I saying that these individuals are at risk of extortion, harassment, or any form of misuse. I am only providing a real-world risk scenario to illustrate the broader landscape of AI-generated explicit images and the potential risks they could pose.

To reduce the risk of personal or private images being stolen or misused by AI tools, I would recommend making social media profiles private so that only friends, family, or known contacts can view posts and images. It is also a good idea to disable public visibility of profile pictures, albums, and tagged photos, and to review followers and contacts to make sure you know who has access to your personal content. As a general rule, avoid sharing photos of children, family members, and others on public platforms without their informed consent. Even the most innocent images can be scraped or stolen and then manipulated; taking proactive steps to secure them adds another layer of privacy. Additionally, remove location data and geotags from images, as these can reveal your home, workplace, school, or other locations that you or others may not want publicly exposed.
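For readers who want to see what removing geotags involves, the sketch below uses only the Python standard library and assumes a baseline JPEG file. In JPEGs, Exif metadata (including GPS coordinates) and XMP are stored in APP1 segments that appear before the compressed image data, so the sketch copies every header segment except APP1. The function name `strip_app1` is hypothetical, and this is an illustration rather than a complete metadata scrubber: other segments and other formats (such as PNG or HEIC) can also carry location data.

```python
import struct

SOI = b"\xff\xd8"  # Start Of Image marker that every JPEG begins with
APP1 = 0xE1        # Application segment 1: holds Exif (including GPS tags) and XMP
SOS = 0xDA         # Start Of Scan: compressed image data follows
EOI = 0xD9         # End Of Image


def strip_app1(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string with all APP1 metadata segments removed.

    Hypothetical helper for illustration: it walks the marker segments that
    precede the image data and copies everything except APP1 segments.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    i = len(SOI)
    while i + 1 < len(jpeg):
        if jpeg[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        marker = jpeg[i + 1]
        if marker == 0xFF:
            # Optional fill byte before a marker; skip it.
            i += 1
            continue
        if marker in (SOS, EOI):
            # From here on it is image data (or the end); copy it unchanged.
            out += jpeg[i:]
            break
        # Other header segments carry a 2-byte big-endian length that
        # counts itself but not the 2-byte marker.
        (length,) = struct.unpack(">H", jpeg[i + 2:i + 4])
        if marker != APP1:
            out += jpeg[i:i + 2 + length]
        i += 2 + length
    return bytes(out)
```

To use it on a file, read the bytes in binary mode, pass them to `strip_app1`, and write the result to a new file. Because the Exif block also stores the camera orientation tag, some viewers may display a cleaned photo rotated; dropping the whole segment trades that convenience for not having to parse individual Exif tags.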

For individuals who believe they may be the target of any type of sextortion attempt or harassment, laws now exist that can help get nonconsensual images and content removed. For example, in the United States, the Take It Down Act (S. 146) was signed into law on May 19, 2025, making it a federal crime to knowingly publish or threaten to publish nonconsensual intimate images, including AI-generated or digitally altered “deepfakes.”

As I understand it, the law also requires major online platforms to establish notice-and-takedown systems and to remove reported intimate images, along with identical copies, within 48 hours. While the criminal provisions took effect immediately, platforms have until May 2026 to comply fully with the takedown requirements. My advice to anyone who receives threats or finds images used without their consent is to contact law enforcement and notify the website or platform where the images appear.

I imply no wrongdoing by MagicEdit, SocialBook, BoostInsider Inc., or any contractors, affiliates, or related entities. I do not claim that internal, customer, or user data was ever at imminent risk. The hypothetical data-risk scenarios I have presented in this report are strictly and exclusively for educational purposes and do not reflect, suggest, or imply any actual compromise of data integrity or illegal activities. This report should not be construed as an assessment of, or a commentary on, any organization’s specific practices, systems, or security measures.

As an ethical security researcher, I do not download the data I discover. I only take a limited number of screenshots when necessary and solely for verification and documentation purposes. I never screenshot, save or download any material that may be illicit or potentially illegal. I do not conduct any activities beyond identifying the security vulnerability and notifying the relevant parties. I disclaim any and all liability for any and all actions that may be taken as a result of this disclosure. I publish my findings to raise awareness of issues of data security and privacy. My aim is to encourage organizations to proactively safeguard sensitive information against unauthorized access.