Rate limiting for network security: What it is and how it works

Any system exposed to the internet can face surges in traffic, from malicious attacks aimed at disrupting services to automated requests that drain resources. Even legitimate users can unintentionally contribute to overload during sudden spikes in demand. Rate limiting serves as a safeguard, controlling the rate of incoming requests to help maintain system stability and prevent abuse.

This article explores how rate limiting works, why it matters for defending networks, and where it fits into real-world security strategies.

What is rate limiting?

Rate limiting is a network control technique that restricts the number of requests a user, application, or system can make to a server within a specified time frame. By defining these boundaries, it helps ensure that traffic stays within the system’s capacity and prevents overload.

Rate limiting is closely related to request throttling, in which a system gradually slows down requests as usage approaches a limit instead of blocking them outright. Both approaches aim to control traffic and protect systems from overload or abuse. However, because throttled requests are delayed rather than rejected, they still eventually reach the server. As a result, throttling on its own offers less protection against sustained overload and malicious activity.

Why is rate limiting important?

Rate limiting is a key security measure that helps defend systems against malicious activity that relies on sending large volumes of requests. This is especially important for small businesses, which are popular targets for cybercriminals.

Two-factor authentication (2FA) shows why this matters. A six-digit code has only 1,000,000 possible values. If a system allows unlimited attempts, an attacker could use automated tools to try every combination in a short time, which makes the code ineffective. Rate limiting prevents this by restricting the number of attempts, preserving the integrity of security mechanisms like 2FA.
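The arithmetic behind this is simple. An attacker who can submit k distinct guesses against a single six-digit code succeeds with probability k / 1,000,000. The sketch below compares an unlimited scenario with a cap of five attempts per code. The cap of five is a hypothetical value chosen for illustration, not a figure from any particular service.

```python
# Chance that an attacker guesses a six-digit 2FA code, given how many
# distinct guesses they can submit before the code expires or is locked.
CODE_SPACE = 10 ** 6  # 000000 through 999999


def guess_probability(attempts: int) -> float:
    """Probability of hitting the correct code with `attempts` distinct guesses."""
    return min(attempts, CODE_SPACE) / CODE_SPACE


# Illustrative scenarios: no limit versus a hypothetical cap of 5 attempts.
unlimited = guess_probability(CODE_SPACE)  # every value can be tried
capped = guess_probability(5)

print(f"unlimited: {unlimited:.0%}")   # unlimited: 100%
print(f"capped at 5: {capped:.4%}")    # capped at 5: 0.0005%
```

Even a generous cap leaves the attacker with odds of a few in a million per code. Without a cap, success is guaranteed given enough time.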

Beyond security, rate limiting also helps keep servers stable and responsive under heavy traffic, providing fair access to legitimate users.

Protects against cyberattacks

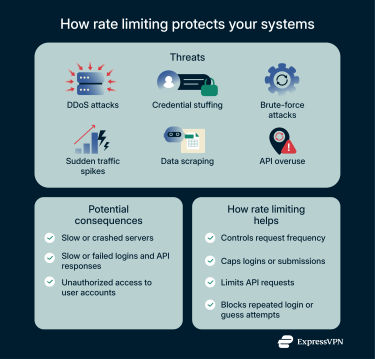

Here are some common types of malicious activity that rate limiting can help mitigate:

- Distributed denial-of-service (DDoS) attacks: These occur when threat actors generate a massive volume of requests to a server or network, often using bots, with the intent of causing slowdowns or outages. Rate limiting contributes to DDoS protection by restricting the number of requests allowed per source within a set time frame. Because DDoS traffic is spread across many sources, rate limiting typically works alongside other defenses rather than on its own.

- Credential stuffing: After cybercriminals acquire a large list of stolen user credentials from a compromised database, they may use bots to try the same username and password combinations on other websites or services, taking advantage of users who reuse passwords across multiple accounts. Rate limiting helps defend against credential stuffing by capping login attempts, which slows or blocks automated tries.

- Brute-force attacks: Like credential stuffing, brute-force attacks involve repeated login attempts, but in this case, attackers don’t rely on stolen credentials. Instead, they systematically guess passwords or cryptographic keys using automated tools. By limiting repeated login attempts, rate limiting also helps prevent brute-force attacks.

Prevents abuse and overloading

Rate limiting also protects systems from unintentional overload caused by legitimate activity, such as:

- Sudden traffic spikes: During peak usage periods, multiple users may try to log in at the same time or submit forms such as registrations, ticket requests, or support inquiries. Repeated requests due to slow responses or accidental resubmissions can compound the issue.

- Data scraping: This occurs when someone uses automated scripts or bots to systematically extract large amounts of data from a website or API. Even if the intent isn’t malicious, the excessive requests can overload servers and slow down legitimate traffic.

- API overuse: Each API request consumes server resources, and excessive or high-frequency requests, whether due to popular usage, software bugs, or misconfigured scripts, can strain servers and slow responses for other users.

Types of rate limits

Rate limiting can be applied in different ways depending on what kind of traffic needs to be controlled. The most common categories are user-based, geographic, and API rate limits. Each approach has its own role in managing load, preventing abuse, and enhancing network security.

User rate limits

User rate limits place restrictions on how many requests a user can make within a defined time window. Requests are often tracked using identifiers such as the user’s account or their IP address.

Here are some examples of user rate limits in action: a social media platform might limit each user to 50 posts per day to prevent spam, while a search engine could restrict users to 30 queries per minute to stop data scraping without interfering with normal activity.

If desired, a service can use IP whitelisting to exempt specific users or systems from standard rate limits, allowing trusted IP addresses to access resources without restriction while still managing overall traffic.
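Two decisions come before any counting. The first is which identifier to track a request under. The second is whether the client is exempt. The sketch below counts signed-in users by account and falls back to IP address for anonymous traffic, and it checks an allowlist of trusted networks using Python's standard `ipaddress` module. The allowlist entries, the `user:`/`ip:` key format, and the function names are hypothetical choices made for illustration.

```python
import ipaddress
from typing import Optional

# Hypothetical allowlist of trusted networks exempt from standard limits.
# Both ranges are reserved for documentation (RFC 5737 / RFC 3849).
ALLOWLIST = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_allowlisted(client_ip: str) -> bool:
    """True if the client's address falls inside any trusted network."""
    addr = ipaddress.ip_address(client_ip)
    # An IPv4 address tested against an IPv6 network (or vice versa) is just False.
    return any(addr in network for network in ALLOWLIST)


def rate_limit_key(client_ip: str, account_id: Optional[str] = None) -> str:
    """Track signed-in users by account; fall back to IP for anonymous traffic."""
    if account_id:
        return f"user:{account_id}"
    return f"ip:{client_ip}"


print(rate_limit_key("198.51.100.7", "alice"))  # user:alice
print(rate_limit_key("198.51.100.7"))           # ip:198.51.100.7
print(is_allowlisted("203.0.113.42"))           # True
print(is_allowlisted("198.51.100.7"))           # False
```

Keying by account means a user can't escape the limit simply by switching IP addresses. Keying by IP covers visitors who aren't signed in, but it may group many people behind one shared address.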

Geographic rate limits

Geographic rate limits apply different request thresholds depending on where the traffic originates. Instead of blocking requests entirely, systems use IP geolocation to identify a user’s region and then adjust rate limits accordingly.

For example, a service might allow higher request volumes from regions with a strong user base or reliable traffic history while applying stricter limits in areas that have shown higher levels of automated or fraudulent activity.
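In code, a geographic limit can be as simple as a lookup table with a default. The sketch below assumes that an IP geolocation service has already turned the client's address into a two-letter country code. That lookup is not shown. The codes "XA" and "XB" are placeholders (user-assigned in ISO 3166-1, not real countries), and the per-minute numbers are hypothetical.

```python
from typing import Optional

# Hypothetical per-region limits in requests per minute. "XA" and "XB" are
# placeholder codes (user-assigned in ISO 3166-1), not real countries.
DEFAULT_LIMIT = 60
REGION_LIMITS = {
    "XA": 120,  # region with a large, well-behaved user base
    "XB": 10,   # region with a history of automated or fraudulent traffic
}


def limit_for(country_code: Optional[str]) -> int:
    """Return the per-minute limit for a client's region, or the default."""
    if not country_code:  # geolocation failed or returned nothing
        return DEFAULT_LIMIT
    return REGION_LIMITS.get(country_code.upper(), DEFAULT_LIMIT)


print(limit_for("XA"))  # 120
print(limit_for("xb"))  # 10
print(limit_for("FR"))  # 60 (no special rule, so the default applies)
print(limit_for(None))  # 60
```

Falling back to a default keeps traffic flowing when geolocation is unavailable or wrong. This matters because IP geolocation is an estimate, not a guarantee.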

API rate limits

API rate limits control how many requests a user or application can make to an API within a specified time period. For example, a weather service API might only allow each developer key to request up-to-date weather data 1,000 times per day. This prevents any single integration from overloading the service while ensuring other developers can continue accessing the API reliably.
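A daily quota like the weather API example can be sketched as a counter keyed by the developer key and the calendar day. The sketch below makes several assumptions: the quota resets at midnight UTC, counts live in one process's memory, and the names `DailyQuota` and `dev-key-1` are hypothetical. A production service would usually keep counts in a shared store and expire old days.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Optional


class DailyQuota:
    """Count calls per API key per UTC calendar day (in memory, one process).

    Counts for past days are never cleaned up here; a real service would
    expire them or keep them in a shared store.
    """

    def __init__(self, quota: int = 1_000):
        self.quota = quota
        self.counts = defaultdict(int)  # (api_key, date) -> calls so far

    def allow(self, api_key: str, now: Optional[datetime] = None) -> bool:
        if now is None:
            now = datetime.now(timezone.utc)
        day = now.astimezone(timezone.utc).date()  # quota resets at UTC midnight
        bucket = (api_key, day)
        if self.counts[bucket] >= self.quota:
            return False
        self.counts[bucket] += 1
        return True


quota = DailyQuota(quota=3)  # tiny quota so the example is easy to follow
late = datetime(2024, 5, 1, 23, 59, tzinfo=timezone.utc)
print([quota.allow("dev-key-1", late) for _ in range(4)])  # [True, True, True, False]
next_day = datetime(2024, 5, 2, 0, 0, tzinfo=timezone.utc)
print(quota.allow("dev-key-1", next_day))  # True
```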

How does rate limiting work?

Rate limiting relies on specific algorithms and enforcement techniques. The following sections outline the most common algorithms and practical methods for implementing them.

Common algorithms used

Different algorithms determine how requests are counted and limited. Choosing the right algorithm depends on many factors, including the type of traffic, desired precision, and system performance requirements.

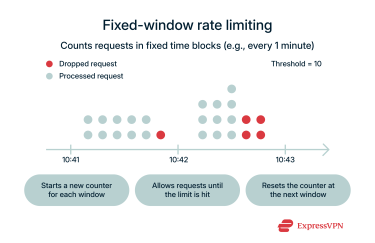

Fixed-window rate limiting

Fixed-window rate limiting counts requests in set intervals aligned to the clock, like one minute or one hour. For example, a ticketing website might allow each user 10 purchases per clock hour (9:00 to 10:00, 10:00 to 11:00, and so on). If a user hits the limit at any time between 9:00 and 10:00, whether at 9:02 or 9:57, they must wait until 10:00, when a new window starts, before making more requests.

This approach is simple and works well for steady traffic, but it can create bursts at window boundaries. For instance, users who hit the limit just before the hour resets can immediately make a new batch of requests, potentially creating a sudden spike in server load.
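A minimal fixed-window counter needs only integer division. The current time divided by the window length identifies which window a request falls into, and each window has its own count. The sketch below keeps counts in memory and takes timestamps as seconds since midnight. Both are simplifying assumptions, and `FixedWindowLimiter` is a hypothetical name. The usage lines reproduce the boundary problem from the ticketing example: 10 purchases at 9:59 and 10 more at 10:00 are all accepted.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each aligned window
    (e.g. 9:00-10:00, 10:00-11:00). In-memory, single-process sketch."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window = window_seconds
        self.counts = defaultdict(int)  # (key, window index) -> count

    def allow(self, key: str, now: float) -> bool:
        window_index = int(now // self.window)  # which aligned window `now` is in
        slot = (key, window_index)
        if self.counts[slot] >= self.limit:
            return False
        self.counts[slot] += 1
        return True


# 10 purchases per hour; timestamps are seconds since midnight.
limiter = FixedWindowLimiter(limit=10, window_seconds=3600)
before = sum(limiter.allow("user-1", 9 * 3600 + 59 * 60) for _ in range(10))  # 9:59
after = sum(limiter.allow("user-1", 10 * 3600) for _ in range(10))            # 10:00
print(before + after)  # 20: twice the hourly limit within about one minute
```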

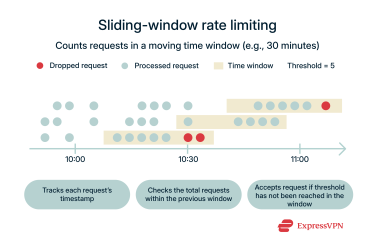

Sliding-window rate limiting

Sliding-window rate limiting counts requests over a moving time frame that updates continuously. For instance, a ride-hailing app might allow 30 location updates in any 10-minute period. If a driver makes a request at 12:05, the system counts all requests from 11:55 to 12:05. Five minutes later, at 12:10, the window would count requests from 12:00 to 12:10.

This continuous tracking spreads requests more evenly and avoids the sudden surges that occur at fixed-window boundaries, but it’s more complex to implement.
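One common way to implement a sliding window is to keep a log of recent request timestamps per client. On each new request, the log drops entries that have aged out of the window. The sketch below uses this "sliding log" approach. Other implementations approximate it with fewer stored values to save memory. The sketch assumes timestamps arrive in non-decreasing order per key, and it uses the ride-hailing numbers from the example above.

```python
from collections import defaultdict, deque


class SlidingWindowLog:
    """Allow at most `limit` requests per key in any trailing window.

    Keeps one timestamp per accepted request (a "sliding log"), so memory
    per key grows with the limit.
    """

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window = window_seconds
        self.log = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key: str, now: float) -> bool:
        timestamps = self.log[key]
        # Discard requests that are a full window or more in the past.
        while timestamps and timestamps[0] <= now - self.window:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False
        timestamps.append(now)
        return True


# The ride-hailing example: 30 location updates in any 10-minute (600 s) period.
limiter = SlidingWindowLog(limit=30, window_seconds=600)
burst = sum(limiter.allow("driver-7", 0.0) for _ in range(30))
print(burst)                              # 30
print(limiter.allow("driver-7", 300.0))   # False: all 30 are still in the window
print(limiter.allow("driver-7", 600.0))   # True: the t=0 updates have slid out
```

Applied to the 9:59/10:00 scenario from the fixed-window section, this limiter would reject the second batch of 10. From its point of view, those requests fall in the same trailing hour as the first batch.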

Implementation techniques

Once a rate-limiting method or algorithm is chosen, it must be enforced effectively through a rate-limiting policy. This policy defines the rules and thresholds that determine how many requests are allowed and how the system responds when limits are reached.

Common elements of a rate-limiting policy include:

- Requests-per-second limit: Caps the number of requests a user or system can make per second to prevent sudden spikes from overwhelming servers.

- Concurrent requests limit: Restricts the number of simultaneous requests a client can make at a given time, helping ensure fair resource allocation.

- Authentication rate limit: Applies limits specifically to login attempts or other sensitive operations to prevent brute-force attacks and credential stuffing.
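The concurrent-requests limit works differently from the other elements. Instead of counting requests over a period of time, it counts requests that are currently in progress and frees a slot when each one finishes. The sketch below is a thread-safe, in-memory version. `ConcurrencyLimiter` and `handle_request` are hypothetical names, and the "processed" string stands in for real request handling.

```python
import threading
from collections import defaultdict


class ConcurrencyLimiter:
    """Cap how many requests each client may have in flight at the same time."""

    def __init__(self, max_concurrent: int):
        self.max_concurrent = max_concurrent
        self._lock = threading.Lock()     # protects _active across threads
        self._active = defaultdict(int)   # client -> requests currently running

    def try_acquire(self, client: str) -> bool:
        """Reserve a slot for `client`, or return False if none is free."""
        with self._lock:
            if self._active[client] >= self.max_concurrent:
                return False
            self._active[client] += 1
            return True

    def release(self, client: str) -> None:
        """Free a slot once the request has finished."""
        with self._lock:
            self._active[client] -= 1


def handle_request(limiter: ConcurrencyLimiter, client: str) -> str:
    if not limiter.try_acquire(client):
        return "rejected: too many concurrent requests"
    try:
        return "processed"  # the real request handling would happen here
    finally:
        limiter.release(client)  # always free the slot, even on errors
```

The `try`/`finally` pattern matters here. If a slot is not released after an error, the client's effective limit shrinks permanently.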

When a user exceeds these limits, the system may respond with a “rate limit exceeded” message and temporarily block further requests, throttle traffic to slow request rates, or queue excess requests for later processing. These enforcement methods, combined with the rules defined in the rate-limiting policy, help maintain server performance, prevent abuse, and ensure fair access for legitimate users.
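When the chosen response is to reject the request, HTTP services commonly return status 429 Too Many Requests (defined in RFC 6585). They often include a `Retry-After` header giving the number of seconds the client should wait. The sketch below builds such a response as a plain `(status, headers, body)` tuple rather than tying it to a particular web framework. It computes the wait for an hourly fixed window. The helper names and message text are hypothetical.

```python
import math
from http import HTTPStatus
from typing import Dict, Tuple


def seconds_until_next_window(now: float, window_seconds: int) -> int:
    """For a fixed window, how long until the counter resets."""
    return math.ceil(window_seconds - (now % window_seconds))


def rate_limited_response(retry_after: int) -> Tuple[int, Dict[str, str], str]:
    """Build (status, headers, body) for a request that exceeded its limit."""
    headers = {
        "Retry-After": str(retry_after),  # delay in seconds before retrying
        "Content-Type": "text/plain; charset=utf-8",
    }
    body = "Rate limit exceeded. Please try again later."
    return int(HTTPStatus.TOO_MANY_REQUESTS), headers, body  # 429


# A request at 9:59 (35,940 s after midnight) against an hourly window.
status, headers, body = rate_limited_response(seconds_until_next_window(35_940, 3600))
print(status, headers["Retry-After"])  # 429 60
```

Telling clients exactly when to retry discourages well-behaved integrations from hammering the server with immediate retries.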

Real-world applications of rate limiting

Rate limiting has practical uses across industries. Here are a few examples of how it can be applied.

API rate limiting in e-commerce

E-commerce platforms rely on APIs for functions like product searches, order processing, and payment handling. High volumes of API requests, whether from integrations, automated tools, or high user traffic, can strain servers and slow down services.

Rate limiting helps prevent this by capping the number of requests allowed per user, integration, or system within a set period. For example, Shopify’s Storefront API limits the number of checkouts that can be created per minute, ensuring the platform remains responsive during peak periods and protecting against automated abuse. E-commerce platforms may also limit requests for product or inventory data to prevent unauthorized scraping of pricing and stock information.

Customer relationship management extensions

Many customer relationship management (CRM) systems allow multiple third-party applications to integrate via APIs, such as marketing platforms, support chatbots, or analytics tools. These integrations can generate high volumes of API requests, especially during peak business hours or automated campaigns.

To maintain system performance and prevent overload, CRMs implement rate limiting. For example, Zendesk enforces limits on the number of API calls per minute or per day depending on the license level. Some CRMs also restrict the number of simultaneous operations for certain API actions, ensuring fair resource allocation across users and integrations.

Rate limiting in content delivery networks (CDNs)

Content delivery networks (CDNs) distribute website content, such as images, videos, and scripts, across multiple servers to reduce load times and improve reliability. Given the high volume of requests they handle, CDNs are vulnerable to various forms of abuse, including bot traffic and automated scraping.

Implementing rate limiting at the CDN level helps mitigate these risks by controlling the frequency of requests from clients. For example, CDNs can limit the number of requests from a single IP or range and also apply rate limits based on request types. This ensures that malicious or excessive traffic is slowed or blocked, while high-priority requests can continue.
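To limit an address range rather than a single IP, one approach is to map each client address to its enclosing network and count requests per network. The sketch below does this with Python's standard `ipaddress` module and combines the range with the request type into one counter key. The /24 (IPv4) and /64 (IPv6) prefix lengths are assumed defaults, not a standard. The request types and their limits in `TYPE_LIMITS` are hypothetical.

```python
import ipaddress

# Hypothetical per-request-type limits (requests per minute per network range).
TYPE_LIMITS = {"static": 1000, "api": 100, "login": 10}


def range_key(client_ip: str, v4_prefix: int = 24, v6_prefix: int = 64) -> str:
    """Map an address to its enclosing network so one range shares a limit."""
    addr = ipaddress.ip_address(client_ip)
    prefix = v4_prefix if addr.version == 4 else v6_prefix
    return str(ipaddress.ip_network(f"{addr}/{prefix}", strict=False))


def limit_key(client_ip: str, request_type: str) -> str:
    """Combine network range and request type into one counter key."""
    return f"{range_key(client_ip)}|{request_type}"


print(range_key("198.51.100.7"))           # 198.51.100.0/24
print(range_key("198.51.100.200"))         # 198.51.100.0/24 (same range)
print(range_key("2001:db8:abcd:12::1"))    # 2001:db8:abcd:12::/64
print(limit_key("198.51.100.7", "login"), TYPE_LIMITS["login"])  # 198.51.100.0/24|login 10
```

Grouping by range makes it harder for a bot to dodge per-IP limits by rotating through neighboring addresses. The trade-off is that legitimate users who share a range also share its limit.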

Rate limiting is often used alongside Domain Name System (DNS) protection or managed DNS to help maintain reliable domain resolution, so users can consistently reach content without disruption.

Rate limiting in large language model (LLM) platforms

You’re likely to encounter rate limiting when accessing platforms powered by large language models (LLMs), such as ChatGPT. These platforms limit the number of requests a user can make within a certain time frame, whether per minute, per hour, or per session.

This type of rate limiting protects the system from overload, prevents abuse, and ensures a fair experience for all users. For instance, limiting how often a user can submit prompts or requests ensures that the platform remains responsive, even during periods of high demand.

FAQ: Common questions about rate limiting

What is the meaning of rate limiting?

Rate limiting is a network control mechanism that restricts how many requests a user, application, or system can make to a server within a set period. It ensures that traffic doesn’t overwhelm system resources, helping maintain stability and fair access.

What is an example of a rate limit?

An example of a rate limit is an API allowing 100 requests per minute per user. This prevents any single user from overloading the server. Another example is an e-commerce platform limiting the number of checkout attempts per minute to keep the site responsive during peak shopping periods.

What is meant by the rate-limiting factor?

A rate-limiting factor is the rule or parameter defined in a rate-limiting policy that sets how many requests a user, system, or application can make within a specific time frame. For example, an administrator might set a limit of 50 API calls per minute per user.

How does rate limiting improve security?

Rate limiting improves security by controlling how quickly users or systems can make requests, which directly reduces the ability of attackers to overwhelm services or exploit automated tools. For example, limiting login attempts hampers brute-force and credential stuffing attacks, while capping API calls curbs automated scraping that could disrupt normal users.

What are the types of rate limits?

The main types of rate limits include user-based, geographic, and API limits. User-based limits restrict the number of requests an individual user or account can make. Geographic limits control requests based on location. API limits cap the number of calls an application or integration can make to an API.

How does rate limiting affect performance?

Properly implemented rate limiting maintains system performance by preventing overload, ensuring servers remain responsive even during traffic spikes. It balances access for legitimate users while controlling abusive or excessive requests.
