What is a neural network, and how does it work in AI?

A neural network is a machine learning method that allows artificial intelligence (AI) models to process data in ways loosely inspired by the structure of the human brain. This helps them recognize patterns and generate outputs such as predictions or classifications based on that data.

In this article, we’ll introduce neural networks, explain how they work, explore their role in AI, and look at their main types, applications, and ethical considerations.

Introduction to neural networks

Neural networks are among the most widely used model types in AI. They play a central role in many modern AI systems, especially those that involve recognizing patterns in large amounts of data, such as:

- Computer vision: Neural networks are one of the primary model types used in computer vision, where they help AI systems process and analyze visual data like images and videos.

- Speech recognition: Neural networks can learn from large, complex audio datasets, which can improve the accuracy of speech-to-text systems.

- Natural language processing (NLP): Neural-network-based deep learning is a leading approach for building NLP systems, enabling AI models like ChatGPT and DeepSeek to analyze and interpret human language more effectively.

- Facial recognition: Neural networks are commonly used in facial recognition technology, which is now part of billions of smartphones around the world.

What is a neural network?

Neural networks are a type of machine learning model that allows AI systems to learn from data without being explicitly programmed for every task. Neural networks use interconnected units, often called neurons or nodes, organized in layers to process information and identify patterns.

How do neural networks process information?

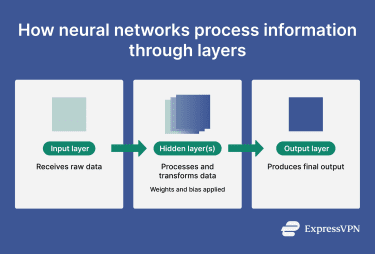

Neural networks process information through interconnected neurons, which receive, transform, and interpret data to make predictions or classifications. Neural networks are typically (but not always) organized into three main layers:

- Input layer: Receives the raw data that the neural network will process, such as numerical representations of images, text, or sensor data.

- Hidden layer: Processes data from the input layer and transforms it into intermediate representations used by the output layer. Some neural networks don’t have any hidden layers, only input and output layers.

- Output layer: Produces the final output of the network, such as a prediction or classification.

Connections carry numerical information from one layer to the next, linking the output of one neuron to the input of another. Weights control how strongly one neuron’s output influences the next, while biases are added as extra values that help shift the final output. Conceptually, it looks like this: Neuron A → Weight → Neuron B (adds bias) → Output.
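To make the weight-and-bias idea concrete, here’s a minimal sketch of a single neuron in Python. The input values, weights, and the choice of a sigmoid activation are assumptions picked for illustration; real networks learn their weights and biases during training.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs, plus the bias, passed through an activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum + bias)


# Hypothetical values: two upstream neurons feed one downstream neuron.
inputs = [1.0, 2.0]
weights = [0.5, -0.25]  # 1.0 * 0.5 + 2.0 * (-0.25) = 0.0
bias = 0.0

result = neuron_output(inputs, weights, bias)  # sigmoid(0.0) = 0.5
```

A larger weight makes the matching input count for more, while the bias shifts the sum up or down before the activation is applied.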

Neural networks vs. traditional algorithms

Neural networks differ from traditional algorithms in four key ways:

- Non-linearity: Standard algorithms often rely on predefined, fixed rules (if X happens, then do Y), which is sufficient for predictable problems. Neural networks can model more complex, non-linear relationships in problems where the patterns are more variable (such as image recognition or speech processing). This non-linearity is enabled by activation functions within the network’s layers, which allow it to capture patterns that simple linear combinations of inputs couldn’t. Without non-linear activation functions, a neural network would be effectively linear, regardless of its depth.

- Learning from data: While traditional algorithms require written rules and fixed instructions, neural networks are trained on data, adjusting their parameters to improve over time.

- Generalization: Traditional algorithms are designed for specific scenarios and often perform poorly in situations where other rules apply. In contrast, neural networks are trained to identify underlying patterns and generalize to unfamiliar data, handling real-world variability more effectively.

- Transparency: Traditional programming is usually easier to trace and debug, whereas neural networks are more complex and often need explainable AI techniques to interpret their outputs, diagnose errors, and understand model behavior.
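To illustrate the non-linearity point above, the sketch below stacks two tiny one-neuron layers. Without an activation function, the two layers collapse into a single linear rule; adding a ReLU activation between them breaks that equivalence. The weights and biases are hypothetical values chosen for the example.

```python
def linear(x: float, w: float, b: float) -> float:
    """A one-input linear layer: multiply by a weight, then add a bias."""
    return w * x + b


def relu(z: float) -> float:
    """A common activation function: negative values become zero."""
    return max(0.0, z)


# Hypothetical weights and biases for two stacked one-neuron layers.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def two_layers_no_activation(x: float) -> float:
    return linear(linear(x, w1, b1), w2, b2)


def collapsed_single_layer(x: float) -> float:
    # w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2)
    return linear(x, w2 * w1, w2 * b1 + b2)


def two_layers_with_relu(x: float) -> float:
    return linear(relu(linear(x, w1, b1)), w2, b2)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
same_without_activation = all(
    abs(two_layers_no_activation(x) - collapsed_single_layer(x)) < 1e-12 for x in xs
)
same_with_relu = all(
    abs(two_layers_with_relu(x) - collapsed_single_layer(x)) < 1e-12 for x in xs
)
```

Here `same_without_activation` is true for every test input, while `same_with_relu` is not, because the ReLU step changes the result for inputs where the first layer’s output is negative.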

Neural networks, machine learning, and AI

Machine learning and neural networks are closely related concepts, but they sit at different levels of the AI field. Understanding how they fit together helps clarify how modern AI systems are built and trained.

How neural networks fit into machine learning

Neural networks are a subset of machine learning, which enables AI models to learn patterns from data rather than relying on explicitly programmed rules. Essentially, a neural network is a specialized form of machine learning.

Compared to many traditional machine learning methods, which often rely on manually designed features, neural networks can learn directly from data. During training, they adjust their internal parameters to minimize errors, which makes them better suited for complex tasks involving large and variable datasets.

Neural networks vs. deep learning vs. AI

A neural network is a machine learning model that uses interconnected layers to process data, while a deep learning model is a type of neural network that uses multiple hidden layers. This allows it to learn more complex patterns from large datasets. In practical terms, this means:

- A standard neural network might have one input layer, one hidden layer, and one output layer.

- A deep learning neural network has one input layer, multiple hidden layers, and one output layer.

This structure allows deep learning models to handle complex tasks (like language modeling or image recognition) more effectively than shallower networks.
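As a rough sketch of that difference, the snippet below builds a network from a list of layer sizes and runs one input through it. The layer sizes, the random starting weights, and the tanh activation are all assumptions for illustration, and the networks are untrained.

```python
import math
import random


def make_layer(n_in: int, n_out: int, rng: random.Random):
    """One fully connected layer: a weight matrix (n_out x n_in) and a bias vector."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def build_network(sizes: list[int], seed: int = 0):
    """Create one weighted layer for each pair of neighboring sizes."""
    rng = random.Random(seed)
    return [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]


def forward(x: list[float], layers) -> list[float]:
    """Pass the input through every layer in order, applying tanh after each one."""
    for weights, biases in layers:
        x = [
            math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)
        ]
    return x


# The input layer only holds data, so [3, 4, 1] yields two weighted layers.
shallow = build_network([3, 4, 1])     # input, one hidden layer, output
deep = build_network([3, 4, 4, 4, 1])  # input, three hidden layers, output

sample = [0.2, -0.5, 0.9]
shallow_out = forward(sample, shallow)
deep_out = forward(sample, deep)
```

The same `forward` function handles both networks; the only thing that makes the second one “deep” is the number of hidden layers between input and output.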

Types of neural networks

Neural networks can be grouped into several common types.

Feedforward neural networks (FNN)

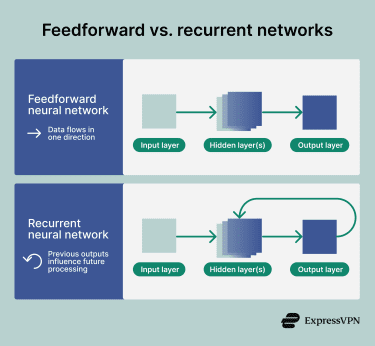

A feedforward neural network (FNN) is a neural network where data moves from input to output in one direction, without any feedback connections or loops. This means the network doesn’t have memory of previous inputs, and each input is processed independently.

Convolutional neural networks (CNN)

Convolutional neural networks (CNNs) are designed for processing visual data such as images. They use convolutional layers (parts of the network that scan the data for specific local patterns) to learn image features, such as edges and shapes, which makes them well-suited for tasks like image recognition, classification, and other computer vision applications.
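Here’s a minimal sketch of what a convolutional layer computes, using plain Python lists. The tiny image and the hand-written edge kernel are assumptions for illustration; in a real CNN, the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products.

    Like most deep learning libraries, this does not flip the kernel, so it is
    technically a cross-correlation.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written vertical-edge kernel (hypothetical values).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
# feature_map == [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is zero wherever the image is flat and non-zero only in the middle column, where the dark-to-bright edge sits.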

Other common applications of CNN-driven computer vision include marketing (social media platforms identifying people in photographs), retail (visual search features on some e-commerce platforms), and healthcare (radiology imaging).

Recurrent neural networks (RNN)

Recurrent neural networks (RNNs) are designed for sequential data processing, where earlier data affects the interpretation of later data. Common use cases include speech recognition, text and language processing, and time-series data analysis.

RNNs use feedback loops to maintain a short-term form of memory, allowing information from earlier inputs to influence how later inputs are processed. This short-term memory creates a larger context for the input-to-output process.
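The feedback loop can be sketched with a single recurrent unit. In this simplified example, the hidden state `h` plays the role of short-term memory; the weights `w_x`, `w_h`, and `b` are hypothetical fixed values rather than trained ones.

```python
import math


def rnn_step(x: float, h_prev: float, w_x: float, w_h: float, b: float) -> float:
    """One recurrent step: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x: float = 1.0, w_h: float = 0.5, b: float = 0.0):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    h = 0.0  # no memory before the first input
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# The last input is 0.0 in both sequences, but the earlier inputs differ.
states_a = run_rnn([1.0, 0.0])
states_b = run_rnn([-1.0, 0.0])
```

Even though both sequences end with the same input, the final hidden states differ (positive for the first, negative for the second), because the earlier input is still carried in `h`.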

Transformer neural networks

Transformers are a newer neural network architecture designed to handle sequential data, especially language. Compared to earlier sequence models like RNNs, transformers process entire sequences at once and use attention mechanisms to identify relationships between different parts of the input.

An attention mechanism is a way for a model to decide which parts of the input it should focus on when processing a specific word/token. Instead of reading a sentence strictly left to right like an RNN, a transformer can look at all words at once and ask: “For this word, which other words in the sentence matter the most?”
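One common form of attention, scaled dot-product attention, can be sketched in a few lines. This is a simplified illustration: the token vectors are made up, and the same vectors are used as queries, keys, and values, whereas real transformers first pass them through learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Score this token against every token, then normalize the scores.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Each output is a weighted average of the value vectors.
        output = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for three tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

For the first token, `weights[0]` gives more weight to the first and third tokens (which point in the same direction) than to the second, which is the “which other words matter most?” question expressed as numbers.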

This allows transformers to track context across long data sequences, generate more accurate outputs, and model relationships based on how words relate to each other. Thanks to this, transformers are among the primary drivers of modern NLP models, machine translation, and speech recognition.

Generative adversarial networks (GAN)

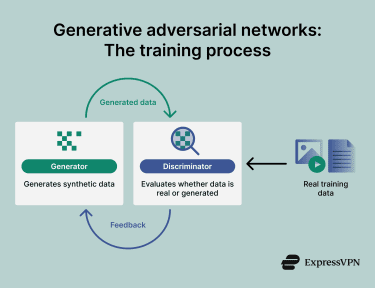

Generative adversarial networks (GANs) are made up of two neural networks trained together in opposition:

- A generator that creates synthetic data

- A discriminator that evaluates whether a given sample is real or was produced by the generator

The aim of GANs is to produce increasingly realistic data that can pass as genuine. During training, both neural networks improve; the generator produces increasingly realistic data, while the discriminator improves its ability to distinguish fake from real data. For instance, AI models that attempt to replicate art pieces or the style of a painter often use GANs.

The discriminator learns in a standard way, trying to minimize the error when predicting whether an image is real or generated. The generator is trained simultaneously using the discriminator’s feedback: it adjusts its weights to maximize the discriminator’s error, producing outputs that are harder to distinguish from real data.

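In the original GAN formulation, both networks are trained against a single shared objective: the discriminator tries to maximize it by correctly identifying real data, while the generator tries to minimize it by fooling the discriminator. In practice, this is often implemented as two separate loss functions, one per network.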
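The shared objective can be written out in a few lines. The sketch below computes the minimax value from the original GAN formulation for two sets of made-up discriminator outputs; no actual generator or discriminator is trained here.

```python
import math


def gan_value(d_real, d_fake):
    """Minimax objective V = mean(log D(x)) + mean(log(1 - D(G(z)))).

    d_real: discriminator probabilities for real samples.
    d_fake: discriminator probabilities for generated samples.
    The discriminator tries to maximize V; the generator tries to minimize it.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Hypothetical discriminator outputs (probability that a sample is real).
sharp_discriminator = gan_value(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled_discriminator = gan_value(d_real=[0.9, 0.8], d_fake=[0.7, 0.6])
```

The value is higher when the discriminator correctly rates generated samples as unlikely to be real, and lower when the generator manages to fool it.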

After training, the discriminator is usually no longer needed, but during training its role is vital: it provides a dynamic, adaptive feedback signal that guides the generator toward realistic outputs. Without that signal, a generator trained only to match its training examples on average tends to produce blurry, averaged results. Even with a discriminator, GANs can suffer from “mode collapse,” where the generator produces very similar outputs each time.

Physics-informed neural networks (PINNs)

Physics-informed neural networks (PINNs) incorporate known physical laws (often expressed as differential equations) directly into the training process. This lets them produce outputs that are more consistent with physical reality, even when data is limited or noisy, or the problem isn’t fully specified.

For example, if you’re trying to predict the price of a financial option, a regular neural network would look only at past market data and learn patterns from examples. If that data is noisy or incomplete (common in financial markets), the model might produce unrealistic predictions. A PINN still learns from data, but it’s also guided by constraints derived from the underlying mathematical principles of the system, similar to “physics rules.” These constraints help the model avoid impossible outcomes.
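The idea of combining data with a governing rule can be sketched as a two-part loss. This toy example swaps the option-pricing setting for a much simpler assumed law, exponential decay (dy/dt = -k * y), and uses hand-written prediction lists in place of a neural network. In a real PINN, the predictions come from the network and the derivatives are usually computed with automatic differentiation rather than finite differences.

```python
def data_loss(predictions, observations):
    """Mean squared error on the (few) points where measurements exist."""
    errors = [(predictions[i] - y) ** 2 for i, y in observations.items()]
    return sum(errors) / len(errors)


def physics_loss(predictions, dt, k):
    """Mean squared residual of dy/dt = -k * y, using finite differences."""
    residuals = []
    for i in range(len(predictions) - 1):
        dy_dt = (predictions[i + 1] - predictions[i]) / dt
        residuals.append((dy_dt + k * predictions[i]) ** 2)
    return sum(residuals) / len(residuals)


def pinn_loss(predictions, observations, dt, k, physics_weight=1.0):
    """Total loss: fit the data and respect the governing equation."""
    return data_loss(predictions, observations) + physics_weight * physics_loss(
        predictions, dt, k
    )


dt, k = 0.1, 1.0
observations = {0: 1.0, 4: 0.66}  # index -> measured value (hypothetical)

# Candidate A decays smoothly; candidate B hits the same data points but oscillates.
candidate_a = [1.0, 0.9, 0.81, 0.729, 0.6561]
candidate_b = [1.0, 0.5, 1.2, 0.3, 0.6561]

loss_a = pinn_loss(candidate_a, observations, dt, k)
loss_b = pinn_loss(candidate_b, observations, dt, k)
```

Both candidates fit the two measurements equally well, but candidate B gets a much higher total loss because it violates the decay rule between measurements. That extra penalty is what steers a PINN away from implausible outputs when data is sparse.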

Beyond finance, PINNs are increasingly used for problems that resemble physical systems, like optimizing traffic flow or supply chain dynamics. Flows and interactions can be treated like fluids in pipes, letting the network leverage structured constraints to improve predictions.

Applications of neural networks

Neural networks are used across many industries to analyze large datasets, recognize patterns, and support automated decision-making.

Use cases in healthcare, finance, and technology

Neural networks are widely used in healthcare, particularly in medical imaging like X-rays and computed tomography (CT), where high accuracy is critical. They can also help analyze patient records, lifestyle data, and test results to contribute to earlier disease identification and prevention.

In finance, neural networks can help organizations with:

- Fraud detection: A 2024 U.S. Treasury announcement stated that machine learning had helped prevent and recover over $1 billion by improving the detection of check fraud. Other studies suggest that deep neural networks can improve fraud-detection accuracy and efficiency by analyzing transaction data for easy-to-miss patterns.

- Financial forecasting: A 2025 study showed that deep learning models, especially RNN-based models, are frequently used in stock and index forecasting. Models combining technical indicators, market sentiment, and fundamental data show improved performance, though results vary across studies and markets.

In technology products, neural networks support speech and language processing systems like speech-to-text systems and virtual assistants, as well as computer vision in devices and software. They’re also used in areas like traffic management, where models aim to optimize traffic flow by analyzing sensor and camera data, although real-time processing limitations are yet to be fully addressed.

Benefits of neural networks

- Data processing: Efficiently process and categorize large volumes of unstructured data, particularly useful for tasks involving images, text, audio, and video.

- Adaptability: Self-adjust internal parameters during training based on new data, making them well suited to complex tasks involving non-linear relationships.

- Scalability: Benefit from larger training datasets, and when combined with modern GPUs, they can process data volumes that overwhelm simpler models.

- Generalization: Generalize patterns and make predictions on previously unseen data, which benefits real-world applications.

- Automatic feature learning: Automatically learn useful features from raw data, reducing the need for manual feature engineering and allowing models to adapt to complex data representations.

- Versatility: Similar neural network principles often apply in different domains, including healthcare, finance, cybersecurity, and consumer technology, with adjustments to suit highly specific use cases.

Current limitations of neural networks

While neural networks have proven their usefulness and potential for improvement, there are still challenges to be addressed.

- “Black box” problem: Neural networks often lack transparent decision logic, and their internal workings can make predictions hard to interpret or trace.

- Computationally expensive: Training and maintenance can require significant computational resources and increased energy consumption.

- Large data requirements: Effective training often requires large datasets, which introduces questions about privacy and data collection.

- Overfitting: Risk of overreliance on training data, reducing a network’s ability to perform well on new, unseen inputs. This can occur when training datasets are small or when models are overly complex.

- Bias and fairness risks: Risk of reflecting or amplifying biases present in training data, which may lead to unfair or uneven outcomes.

- Difficult model tuning: Creating and tuning neural networks often involves many parameters and architectural decisions, which demands expertise, experimentation, and computational effort.

How neural networks are trained

Training is essential to designing effective neural networks that are adaptable, scalable, and versatile. The quality and size of the training data, and any bias it contains, strongly influence a model’s accuracy and practicality in real-world applications.

Step-by-step overview of the training process

Training a neural network can be summed up in a few steps:

- Data preparation: Researchers gather and structure a dataset and create inputs and labels based on it. These will be used to help the model correlate specific inputs to specific outputs.

- Network initialization: Before training begins, the network’s architecture (such as the number of layers and connections) is defined, and its weights and biases are set to starting values, often small random numbers.

- Forward pass: The input data is fed into the network from the input layer to hidden layers and to the output layer, where a prediction is formed.

- Loss calculation: The resulting prediction is compared to the correct label using a loss function, which measures how much the model has deviated from the expected target value. In simple terms, it calculates how far the model’s answer was from the truth.

- Backpropagation: Through backpropagation, the error is propagated backward through the network to calculate how much each weight and bias contributed to it.

- Parameter updates: Using the gradients obtained through backpropagation, an optimization algorithm (such as gradient descent) updates the network’s parameters to reduce future errors.

- Repeated training cycles (epochs): The processes of forward pass, loss calculation, backpropagation, and weight update are repeated many times during the training process. Each epoch is one complete pass over all the examples in the dataset. Repeating these cycles allows the network to gradually improve its performance and better learn the patterns in the data.

- Validation on unfamiliar data: The model’s performance is regularly checked on data that wasn’t part of the training dataset, typically by tracking the validation loss. This helps monitor generalization and detect overfitting early.

- Final evaluation and deployment: Once training is complete, researchers evaluate the model on a separate test set to estimate real-world performance. If the results are acceptable, the model may be deployed for practical applications.
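The steps above can be sketched with the smallest possible “network”: a single neuron with one weight and one bias, trained by plain gradient descent. The dataset (points on the line y = 2x + 1), the learning rate, and the number of epochs are assumptions chosen so the example converges quickly. Real projects would typically rely on a framework to compute the gradients.

```python
def predict(x: float, w: float, b: float) -> float:
    """Forward pass for a single linear neuron."""
    return w * x + b


def mse(data, w: float, b: float) -> float:
    """Loss function: mean squared error between predictions and labels."""
    return sum((predict(x, w, b) - y) ** 2 for x, y in data) / len(data)


# Data preparation: inputs paired with labels, generated from y = 2x + 1.
train_data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0]]
val_data = [(x, 2.0 * x + 1.0) for x in [0.5, 2.5]]  # held out from training

# Network initialization: start from arbitrary parameter values.
w, b = 0.0, 0.0
learning_rate = 0.05

initial_val_loss = mse(val_data, w, b)

for epoch in range(500):  # each epoch is one full pass over the training data
    grad_w = 0.0
    grad_b = 0.0
    for x, y in train_data:
        error = predict(x, w, b) - y  # forward pass and error for this example
        # Backpropagation for this tiny model: gradients of the MSE loss.
        grad_w += 2 * error * x / len(train_data)
        grad_b += 2 * error / len(train_data)
    # Parameter update: step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

# Validation: check performance on data the model never trained on.
final_val_loss = mse(val_data, w, b)
```

After training, `w` and `b` end up close to 2 and 1, and the validation loss is far lower than it was at the start, which suggests the model has learned the underlying pattern rather than memorizing the training points.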

The neural network training process is a controlled pipeline in which much of the work is automated (like backpropagation and weight and bias updates), but human oversight is still needed to steer the system in the right direction.

Tools and frameworks used for training neural networks

Deep learning frameworks and libraries are essential tools for neural network training. In the AI industry, there are several frameworks that are widely used and aim to make neural network training more accessible and faster:

- PyTorch: Originally developed by Facebook (now Meta), PyTorch is an open-source framework with a Python interface and a C++ backend, and it has been backed by tech giants like Microsoft and Meta. It has gained popularity for its dynamic computation graph, where the model’s graph is built step by step as the code runs instead of being defined in advance. This makes debugging and experimentation easier. PyTorch can be used to train and fine-tune large language models (LLMs).

- TensorFlow: Created by Google Brain and then improved in the newer TensorFlow 2.0, this framework focuses on scalability and production pipelines for enterprises. TensorFlow supports building, training, and deploying neural networks across different environments, from cloud systems to mobile and edge devices. Its integration with Keras (see below) further simplifies model development while supporting large-scale pipelines.

- Keras: An open-source library that provides an API for building and training neural networks, Keras is designed for ease of use and modularity. Its support for popular backends like TensorFlow makes Keras a popular choice for developers. The library is mostly used for NLP, time series analyses, and image recognition.

- JAX: Developed by Google as a high-performance computing framework, JAX has gained significant popularity in the research sector, mainly due to its function transformations (such as automatic differentiation, vectorization, and parallelization), which help improve computation efficiency. Its NumPy-like syntax (NumPy is an open-source Python library used for numerical computing) makes it easier to adopt for real-life applications such as LLMs, vision transformers, or diffusion models (generative AI that can create high-quality data through a “diffusion” process).

- Hugging Face Transformers: An open-source library that provides prebuilt transformer-based models and tools for tasks such as text classification, translation, and summarization. The library supports multiple backends, including PyTorch, TensorFlow, and JAX, and is widely used for NLP and conversational AI research.

Ethical considerations in neural networks

Neural networks can affect people’s lives in ways that aren’t always obvious, so it matters how they’re designed, trained, and deployed.

Bias and fairness in AI models

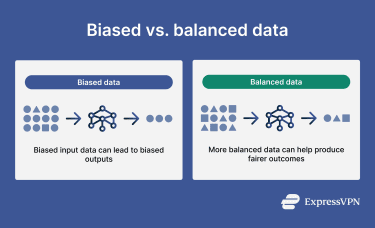

One of the most widely discussed ethical challenges in neural networks is fairness, specifically the level of bias in the training data. When datasets are incomplete or unrepresentative, the network might make unfair predictions based on stereotypes.

In real-world applications, this can reinforce existing societal biases, which can be especially significant in industries like hiring, law enforcement, or healthcare. For instance, an unfair AI-powered recruitment tool may favor one gender over the other based on historically biased data.

AI bias can emerge at multiple stages of a model’s life cycle, from initial data collection and labeling to model design and deployment. (This is distinct from the “bias” values inside neurons, which are simply learned parameters.) The human element can also give rise to bias, where developers’ unconscious biases unintentionally affect the model’s predictions.

A common solution to this is fairness-aware training, where developers audit datasets, measure bias using fairness metrics, and adjust training processes to reduce disparities. Bias detection tools and frameworks (such as IBM’s AI Fairness 360 or Google’s What-If Tool) can also help identify and evaluate potential bias before deployment.
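As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap in how often a model gives a positive outcome to different groups. The group names and predictions are hypothetical, and this is only one of several metrics in use. The functions are written from scratch here and aren’t taken from any particular fairness library.

```python
def selection_rate(predictions) -> float:
    """Share of positive (1) predictions in a group."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(preds_by_group) -> float:
    """Gap between the highest and lowest selection rate across groups."""
    rates = [selection_rate(p) for p in preds_by_group.values()]
    return max(rates) - min(rates)


# Hypothetical screening decisions (1 = advance, 0 = reject) for two groups.
predictions = {
    "group_a": [1, 1, 1, 0],  # 75% selected
    "group_b": [1, 0, 0, 0],  # 25% selected
}

gap = demographic_parity_difference(predictions)  # 0.75 - 0.25 = 0.5
```

A gap of 0 would mean both groups are selected at the same rate. A large gap like this one is a signal to investigate the training data and model further, not proof of unfairness on its own.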

Transparency, accountability, and explainability

Transparency tends to be a serious problem for neural networks. Many modern AI models are “black boxes,” where the developers themselves find it difficult to understand why or how the model makes a prediction.

In high-stakes industries like healthcare or criminal justice, this lack of transparency and accountability can impact regulatory compliance and trust. For example, when an AI system flags a medical image or rejects a loan application, stakeholders need to understand which factors justified the decision. That may be infeasible if the AI model’s reasoning is unclear or can only be approximated.

Explainable AI seeks to improve the transparency and accountability of AI models by making their decisions more interpretable. This can enable more effective model evaluation, faster identification of errors or bias, and better alignment with regulatory, compliance, and risk management requirements.

Privacy and data responsibility

Another significant challenge for neural network applications is data collection and the privacy issues that come with it:

- Developing and training neural networks often requires vast amounts of data collected from the internet. Inevitably, this may include personal finance data, healthcare information, biometric data, or data from social media sites, which can raise concerns, especially under data privacy laws like the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

- Data exfiltration attacks against AI models can also trick the system into leaking sensitive data. Prompt injection attacks, where attackers disguise malicious demands as legitimate prompts, may also lead to data exposure.

- Data leakage can arise from improper handling of the AI model at the application or system level. For example, in March 2023, the BBC reported how a ChatGPT bug allowed users to see the conversation titles of other users’ chats.

FAQ: Common questions about neural networks

What is a neural network in simple terms?

A neural network is a type of AI system whose structure is loosely inspired by the human brain. It learns patterns from data and makes predictions without being explicitly programmed for every task.

Is ChatGPT a neural network?

ChatGPT is a large language model (LLM) based on a neural network. More specifically, it uses a large-scale transformer architecture that’s designed for processing and generating human language effectively.

What’s the difference between AI and neural networks?

Neural networks are a sub-field of artificial intelligence (AI), which is the broader field of designing and developing systems that can perform tasks often associated with human intelligence (like pattern recognition). A neural network is a specialized type of AI that uses machine learning to recognize patterns and make predictions in variable scenarios using interconnected layers of nodes called neurons.

What are the main types of neural networks?

The main types of neural networks are feedforward neural networks (FNNs), recurrent neural networks (RNNs), transformers, convolutional neural networks (CNNs), generative adversarial networks (GANs), and physics-informed neural networks (PINNs).

Which industries use neural networks today?

Industries that use neural networks today include healthcare, finance, and retail. Within these industries, they power applications like computer vision, speech recognition, facial recognition, and natural language processing (NLP).

Can neural networks learn without supervision?

Yes, some neural networks can learn without human-provided labels. This is often called unsupervised or self-supervised learning: instead of being told the correct answer for each example, the network infers patterns and structure from the data itself. For example, a language model can learn by predicting the next word in existing text.
