The hidden dangers of AI voice scams

As AI voice cloning technology advances, requiring as little as a few seconds of audio to create a realistic replica, voice-based scams are becoming both more frequent and more sophisticated.

While voice phishing was once a niche threat, these highly believable impersonations of loved ones, executives, and authorities are now alarmingly common.

In this guide, we explain how AI voice scams work, the warning signs to listen for, and how to better protect yourself with simple verification habits. We also outline what to do if you've encountered one of these scams.

What are AI voice scams?

An AI voice scam is when a scammer uses voice cloning technology to impersonate a real person (normally a friend, family member, colleague, or business).

The scammer then uses psychological tactics to pressure the victim into taking a particular action, such as making a transfer or sharing personal details like logins.

AI-generated voice scams typically occur over phone calls or voice notes and are highly convincing. In addition to the familiar voice, a spoofed caller ID can make the call appear legitimate.

The victim hears a voice they trust, and the request is urgent.

It might be a “family emergency” call, a voice note that claims they need to pay a bill right now, or a message that sounds like a colleague asking for access to a company asset.

How AI voice cloning scams work

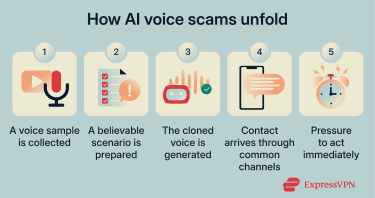

Phone call scams using AI incorporate a mix of synthetic voice technology and classic social engineering techniques. Below, we break down how these scams work.

Understanding AI-based voice phishing

AI-based voice phishing (vishing) is a scam designed to get you to hand over something valuable while you're on a call. The goal is usually something the scammer can use immediately, such as a one-time code, a password reset, a bank transfer, or account access.

Unlike email phishing, where you can pause and inspect a link, vishing pressures you in real time.

What makes AI-based vishing different is that it can be integrated into a broader setup. A scam may start with a normal-looking message, then shift into a call to “confirm” details, resolve a fake issue, or walk you through “security steps.” This reduces the chance that you'll stop to think, and it makes the request feel more legitimate.

The best way to recognize this type of phishing is to watch for control tactics. Vishing often includes a steady push to keep you on the line, stop you from verifying, and frame the situation as too urgent for a routine check.

If the caller gets irritated when you say you'll call back or tries to steer you away from a trusted number, treat that as a warning sign.

Voice cloning techniques and tools

Voice-cloning scams rely on the same technology used in legitimate AI voice tools. These systems begin with real audio of a person speaking, which may be recorded intentionally or gathered from publicly available sources such as social videos, livestreams, webinars, and even voicemail greetings.

What matters most is not the exact words in the recording, but how they’re spoken. Clear audio with natural variation in tone, pacing, and emphasis gives the system enough information to learn the characteristics that make a voice recognizable. Modern tools can often do this with surprisingly short samples, which is why publicly shared voice recordings can be enough to create a convincing clone.

Once the audio is collected, it’s converted into numerical data. The system analyzes features such as pitch patterns, vocal tone, rhythm, pronunciation habits, and stress. At this point, speech is no longer treated as sound but as data.

These features are then compressed into what’s known as a speaker embedding: a mathematical representation of a person’s vocal identity. Because the voice identity is stored independently, it can be paired with any new message. This is what allows scammers to generate convincing calls in a familiar voice while delivering entirely fabricated requests.
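To make the idea of a speaker embedding more concrete, here is a minimal Python sketch of how two embeddings can be compared. The four-number vectors and variable names are invented for illustration; real systems produce much longer vectors with a trained neural network, and nothing here reflects a specific tool.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings (assumption: real ones are far longer and are
# produced by a trained model, not typed in by hand).
speaker_a_clip_1 = [0.9, 0.1, 0.3, 0.2]
speaker_a_clip_2 = [0.8, 0.2, 0.35, 0.15]
speaker_b_clip = [0.1, 0.9, 0.2, 0.7]

same_speaker = cosine_similarity(speaker_a_clip_1, speaker_a_clip_2)
different_speaker = cosine_similarity(speaker_a_clip_1, speaker_b_clip)

print(f"same speaker:      {same_speaker:.2f}")
print(f"different speaker: {different_speaker:.2f}")
```

With these toy numbers, the two clips from the same speaker score close to 1, while the clip from a different speaker scores much lower. That closeness is what "vocal identity as data" means in practice.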

When new text is introduced, the system predicts how it should sound in the target voice. A synthesis model then turns those predictions into an audio waveform, adding natural-sounding pauses and subtle imperfections. The result is speech that can closely resemble the real person, even though that person never spoke those words.

Some tools go a step further by enabling real-time voice cloning. Instead of generating speech from text, live audio is captured, mapped onto the cloned voice, and regenerated almost instantly. This makes it possible to impersonate someone during a live phone call, making the scam even more convincing.

The critical point is that these tools are now far more accessible than most people realize.

Warning signs of voice cloning

A convincing clone can sound natural, so don’t rely on “robotic” glitches alone. Pay attention to how the call unfolds and to what the caller is trying to get you to do.

If the voice suddenly asks for money, gift cards, crypto, one-time codes, or login details, treat it as a major red flag, especially if the request is time-sensitive. The same goes for any attempt to rush you or keep you quiet, like “don’t tell anyone” or “do this right now.”

You might also notice awkward pacing, such as strange pauses, slight delays after you ask a question, or a script-like flow that doesn't adapt when you interrupt.

While a cloned voice can match tone and accent, the details can fall apart. If the story remains vague, contains incorrect key facts, or if the caller avoids sharing specifics that the real person would typically provide, this should be a sign that something's wrong.

In some “family emergency” setups, the familiar voice is only used briefly before the scammer takes over and controls the conversation. Always pause and verify through another channel.
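The behavioral warning signs above can be written down as a simple checklist. The following Python sketch is only an illustration: the phrase lists and the `find_red_flags` function are hypothetical, and plain keyword matching is far too crude to act as a real scam detector.

```python
# Hypothetical phrase lists based on the warning signs described above.
RED_FLAGS = {
    "payment_or_credentials": (
        "gift card", "crypto", "wire", "one-time code", "password", "login",
    ),
    "urgency": ("right now", "immediately", "urgent"),
    "secrecy": ("don't tell anyone", "keep this between us"),
}

def find_red_flags(transcript: str) -> list[str]:
    """Return the names of the red-flag categories found in a transcript."""
    # Lowercase and normalize curly apostrophes so phrases match reliably.
    text = transcript.lower().replace("\u2019", "'")
    return [
        name
        for name, phrases in RED_FLAGS.items()
        if any(phrase in text for phrase in phrases)
    ]

message = "Mom, I need you to buy gift cards right now and don't tell anyone."
print(find_red_flags(message))
```

The point of the sketch is that none of the checks look at how the voice sounds. They only look at what is being asked and how, which is the safer thing to focus on.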

Real examples of AI voice scams

Real-world examples show the approaches scammers use and the impact these scams can have:

- Family emergency scams: In one widely reported case, an Arizona mom got a call that sounded like her 15-year-old daughter pleading for help. A man then took over the call and demanded a $1 million ransom, using the cloned voice to keep the pressure on.

- Business impersonation: Fraudsters used a deepfake voice to mimic the CEO of a German parent company and convinced a manager at a UK-based energy firm to wire €220,000 (about $243,000) as an “urgent” transfer.

- Official impersonation: Public advisories describe campaigns where scammers impersonate senior U.S. officials to target their contacts. The approach often starts with messages and moves to calls, where the voice is used to build credibility and pull the target into a conversation or request.

How to protect yourself from AI voice fraud

Because a familiar voice is no longer proof of identity, AI voice scams are among the most concerning AI-powered scams. The good news is that a few habits can reduce the risk, even as voice cloning becomes more convincing.

Voice authentication and verification

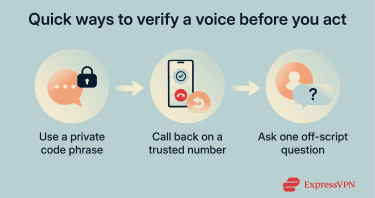

The strongest defense against this type of scam is verification that doesn't rely solely on voice analysis. If a call, voice note, or voicemail asks for something sensitive, assume verification is required.

- Use a private verification phrase: Families, close friends, and even small teams can agree on a simple phrase or question. In a real emergency, a genuine caller can answer it easily. A scammer using a cloned voice usually cannot, because the answer was never shared publicly. This approach is recommended because it breaks the scammer's script.

- Call back using a number you trust: If a request is unexpected, hang up and call the person or organization using a saved contact or an official number from a trusted website. Do not use a number provided during the call.

- Ask questions that force unscripted answers: A cloned voice can repeat prepared lines, but the scammer behind it struggles once the conversation goes off track. Asking about something that isn't public, like a recent shared event, can quickly expose the scam.
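The habits above boil down to one rule: for a sensitive request, only checks made outside the suspicious call count as verification. Here is a minimal Python sketch of that rule; the `Request` class, its field names, and `may_proceed` are hypothetical and exist only to illustrate the logic.

```python
from dataclasses import dataclass

@dataclass
class Request:
    """A hypothetical record of an incoming call or voice note."""
    sensitive: bool                             # money, codes, logins, access
    voice_sounded_familiar: bool = False        # recorded, but never trusted
    called_back_on_saved_number: bool = False   # out-of-band callback done
    verification_phrase_matched: bool = False   # private phrase answered

def may_proceed(req: Request) -> bool:
    """Decide whether it is reasonable to act on the request."""
    if not req.sensitive:
        return True
    # A familiar voice is deliberately ignored: it is not proof of identity.
    return req.called_back_on_saved_number or req.verification_phrase_matched

urgent_call = Request(sensitive=True, voice_sounded_familiar=True)
print(may_proceed(urgent_call))  # voice alone is not enough

urgent_call.called_back_on_saved_number = True
print(may_proceed(urgent_call))  # verified through a trusted channel
```

Notice that `voice_sounded_familiar` never appears in the decision. That omission is the design choice the sketch is meant to highlight.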

Preventing misuse of your voice and identity

These scams show that public audio, including videos, livestreams, and voice notes, can be reused. Here are a few practical ways you can better protect yourself from AI identity theft:

- Share less personal context publicly: Voice scams often pair a cloned voice with details pulled from social media, travel posts, or breached data. Limiting public exposure helps control how much someone can learn about you.

- Rethink voice-as-a-password features: If a service uses voice authentication, ask what anti-spoofing safeguards it has, and consider switching to safer options where available, such as app-based approvals, PINs, or security keys.

- Stay up-to-date with developing deepfake defenses: Detection and prevention tools are improving, including work on real-time deepfake detection, audio watermarking, and verification systems to flag synthetic speech.

Reporting and legal recourse

Reporting AI voice scams matters. Reports help identify patterns and support cybersecurity investigations that span multiple regions.

A single voice call can lead to follow-up texts, phishing links, or account recovery attempts that reuse the same details. Reporting and documenting what happened is therefore part of basic cybersecurity hygiene, not an overreaction.

Start with your local police or national fraud reporting body, and share call times, numbers used, the scenario presented, and any financial or data exposure.

Most countries have their own cybercrime portals or government agencies where victims can report incidents. For example:

- The U.K.: Report scams to Action Fraud.

- Canada: Report to the Canadian Anti-Fraud Centre.

- The U.S.: File reports through the Internet Crime Complaint Center at IC3.gov and consumer fraud portals that track voice phishing and deepfake-related scams.

If there's a risk of financial loss, contact your bank immediately. In some cases, fast reporting can limit losses or prevent follow-up fraud.

Wider impacts of AI voice scams

AI voice scams are not just another phone scam. A 2023 global survey of 7,000 people found that one in four adults had either experienced an AI voice-cloning scam or knew someone who had, which suggests these schemes are reaching ordinary people, not just rare headline cases.

They are spreading as voice cloning tools become easier to access and more convincing, increasing the likelihood of encountering them in routine calls, voice notes, and follow-up messages.

For a long time, “I know that voice” was a shortcut to trust. As voice cloning becomes harder to spot, that shortcut breaks, and verification starts to look more like a normal step than an awkward exception.

This creates pressure on the legal system. Fraud and impersonation laws already apply, but many places are still working through how to handle AI-generated impersonation at scale, including more explicit rules against impersonation scams and stronger ways to prove authenticity.

Courts and legal experts are also discussing how to evaluate deepfaked audio and video as evidence and how to address the risk that genuine recordings could be dismissed as fake. That is a new kind of problem. A voice can be used as a weapon and later treated as deniable, even when real harm was done.

For businesses, the impact is also procedural. A convincing voice can undermine informal approvals, “quick confirmation” calls, and voice-based authentication. That is why deepfake-enabled fraud is pushing more organizations toward stricter sign-off rules and multi-person verification for payments and access, especially after high-value cases showed how far a familiar voice can move someone in the moment.
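A multi-person verification rule of the kind described above can be sketched in a few lines of Python. The `payment_approved` function, the 10,000 threshold, and the role names are assumptions made up for this example, not a description of any particular company's policy.

```python
def payment_approved(amount, requester, approvers,
                     threshold=10_000, required_approvers=2):
    """Check whether enough independent people signed off on a payment.

    The requester never counts as an approver, so a convincing voice
    claiming to be the requester cannot approve its own request.
    """
    independent = {name for name in approvers if name != requester}
    needed = required_approvers if amount >= threshold else 1
    return len(independent) >= needed

# An "urgent" high-value transfer requested by phone, with no one else involved.
print(payment_approved(220_000, "ceo", ["ceo"]))

# The same transfer after two other people confirm it through normal channels.
print(payment_approved(220_000, "ceo", ["finance_lead", "controller"]))
```

The first call is rejected and the second is accepted. However persuasive a single caller sounds, the rule depends on people who were not part of that call.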

Work is underway on methods to prove authenticity, including techniques such as audio watermarking and stronger verification systems for recordings. The challenge is that as detection improves, voice generation improves too, so it becomes an ongoing back-and-forth rather than a one-time fix.

FAQ: Common questions about AI voice scams

What is an AI voice scam?

An AI voice scam is an impersonation scam that uses a cloned voice to get the victim to act, whether to send money, give the scammer access to an account, or provide sensitive information. The key difference from a typical phone scam is that the voice is often a replica of someone you actually know.

How can you tell if someone is using an AI voice?

You usually can’t tell it’s an AI voice just by listening, given how accurate these replica voices are. Instead, watch for warning signs, such as requests for one-time codes and social engineering tactics, like pressure to act fast or to keep it secret.

If anything feels off, verify through another channel, such as calling back a saved number, using a private code word, or confirming over text.

What should I do if I receive a suspicious voice message?

Don’t reply, don’t send money, and don’t share codes or passwords. Verify the request first, either by calling back on a saved number or by checking through another trusted channel. If you engaged in any way, report the incident, and if you fear financial loss, contact your bank immediately.

What are the legal consequences of AI voice fraud?

Using a cloned voice to deceive someone for money or access generally falls under existing fraud, impersonation, and identity-theft laws, even if “AI deepfakes” aren’t named in every statute.

What is audio watermarking?

Audio watermarking is a technique used to embed hidden, machine-readable signals directly into an audio file at the time it’s created. These signals are designed to be inaudible to people but can later be detected by software to indicate that the audio was generated by an AI system, when it was created, or which tool produced it. Because the watermark is built into the sound itself rather than stored as removable metadata, it is designed to survive common changes like compression or re-encoding.

In the context of AI-generated speech, audio watermarking helps platforms and investigators distinguish synthetic audio from real recordings and assess authenticity after the fact. While it doesn’t prevent misuse or protect someone during a live phone call, it can support fraud investigations, content moderation, and future verification systems by providing evidence that an audio clip is AI-generated rather than human-recorded.
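For readers curious about the mechanics, the following Python sketch shows one classic textbook approach, a keyed spread-spectrum watermark. It is a toy under stated assumptions: the function names, the `strength` value, and the test tone are invented for illustration, and production systems use more sophisticated, perceptually shaped schemes.

```python
import math
import random

def keyed_sequence(key, length):
    """Generate a repeatable pseudo-random sequence of +1/-1 values from a key."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]

def embed_watermark(samples, key, strength=0.02):
    """Add a faint keyed noise pattern to the audio samples."""
    mark = keyed_sequence(key, len(samples))
    return [s + strength * m for s, m in zip(samples, mark)]

def detect_watermark(samples, key, strength=0.02):
    """Correlate the audio with the keyed pattern and compare to a threshold."""
    mark = keyed_sequence(key, len(samples))
    score = sum(s * m for s, m in zip(samples, mark)) / len(samples)
    return score > strength / 2

# Stand-in for generated speech: one second of a 220 Hz tone at 16 kHz.
sample_rate = 16_000
audio = [0.3 * math.sin(2 * math.pi * 220 * t / sample_rate)
         for t in range(sample_rate)]

marked = embed_watermark(audio, key="generator-secret")

print(detect_watermark(marked, key="generator-secret"))  # checked with the right key
print(detect_watermark(audio, key="generator-secret"))   # unmarked audio
```

Detection works because only someone holding the key can regenerate the exact pattern to correlate against. To a listener without the key, the added pattern is just faint noise.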
